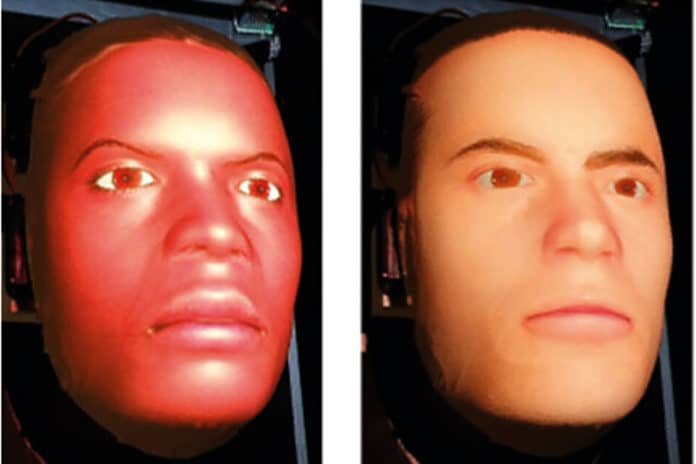

Medical training simulators can provide safe, controlled, and effective learning environments in which medical students can practice hands-on physical examination skills. However, most existing robotic medical training simulators that can capture physical examination behaviors in real time cannot display facial expressions, which are an important source of information for physicians. They also represent only a limited range of patient identities in terms of ethnicity and gender.

A team led by researchers at Imperial College London has developed a way to generate more accurate expressions of pain on the faces of medical training robots during the physical examination of painful areas. The robotic system can simulate facial expressions of pain in response to palpation, displayed on a range of patient face identities. This could help teach trainee doctors to read cues in patients' facial expressions so that they use only the force necessary for a physical examination. The technique could also help detect and correct early signs of bias in medical students by exposing them to a wider variety of patient identities.

“Improving the accuracy of facial expressions of pain on these robots is a key step in improving the quality of physical examination training for medical students,” said study author Sibylle Rérolle from Imperial’s Dyson School of Design Engineering.

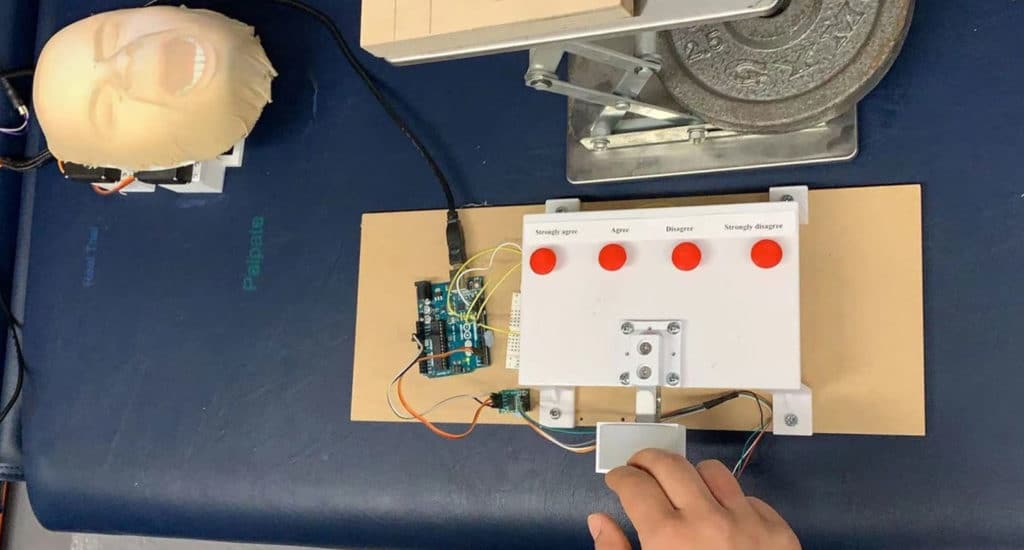

In the study, undergraduate students were asked to perform a physical examination on the abdomen of a simulated patient. Their palpation triggered the real‑time display of six pain-related facial Action Units (AUs) on a robotic face (MorphFace). The researchers found that the most realistic facial expressions occurred when the upper-face AUs were activated first, followed by the lower-face AUs. In particular, a longer delay before activating the Jaw Drop AU produced the most natural results.
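To make the reported ordering concrete, here is a minimal Python sketch of an activation schedule in which upper-face AUs start first, lower-face AUs follow, and Jaw Drop is delayed further. It is an illustration under stated assumptions, not the team's implementation: the FACS codes are standard, but the specific six AUs listed, the function `activation_schedule`, and the delay values (in milliseconds) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ActionUnit:
    code: int    # FACS Action Unit number
    name: str
    region: str  # "upper" or "lower" face


# Hypothetical AU set: the FACS codes are standard, but whether these are
# exactly the six AUs shown on MorphFace is an assumption.
PAIN_AUS = [
    ActionUnit(4, "Brow Lowerer", "upper"),
    ActionUnit(6, "Cheek Raiser", "upper"),
    ActionUnit(7, "Lid Tightener", "upper"),
    ActionUnit(9, "Nose Wrinkler", "lower"),
    ActionUnit(10, "Upper Lip Raiser", "lower"),
    ActionUnit(26, "Jaw Drop", "lower"),
]


def activation_schedule(aus, lower_delay_ms=200, jaw_extra_delay_ms=300):
    """Return (onset_ms, AU) pairs sorted by onset time.

    Upper-face AUs start at 0 ms, lower-face AUs after lower_delay_ms,
    and Jaw Drop (AU26) after an additional jaw_extra_delay_ms.
    The delay values are illustrative placeholders, not study results.
    """
    schedule = []
    for au in aus:
        if au.region == "upper":
            onset = 0
        elif au.code == 26:
            onset = lower_delay_ms + jaw_extra_delay_ms
        else:
            onset = lower_delay_ms
        schedule.append((onset, au))
    # sorted() is stable, so AUs with equal onsets keep their listed order.
    return sorted(schedule, key=lambda pair: pair[0])


if __name__ == "__main__":
    for onset, au in activation_schedule(PAIN_AUS):
        print(f"{onset:>4} ms  AU{au.code:<3} {au.name}")
```

Using integer milliseconds keeps onset times exact. In a real system, these onsets would presumably be triggered by a palpation event rather than run once.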

The team also found that participants' perception of the robotic patient's pain depended on the gender and ethnic differences between participant and patient, and that these perception biases affected the force applied during the physical examination.

“Previous studies attempting to model facial expressions of pain relied on randomly generated facial expressions shown to participants on a screen,” said lead author Jacob Tan, also of the Dyson School of Design Engineering. “This is the first time that participants were asked to perform the physical action which caused the simulated pain, allowing us to create dynamic simulation models.”

The students were asked to rate the appropriateness of the facial expressions from “strongly disagree” to “strongly agree,” and the researchers used these responses to find the most realistic order of AU activation.
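The sketch below shows one simple way Likert-scale ratings could be turned into a ranking of AU activation orders: code each answer numerically and sort orderings by their mean score. This is a deliberate simplification. The article does not describe the study's statistical analysis, the three middle labels of the scale are assumptions (only the endpoints are named above), and `rank_orderings` and the example data are hypothetical.

```python
from statistics import mean

# Assumed 5-point Likert coding; only the two endpoint labels come from the article.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def rank_orderings(responses):
    """Rank AU activation orderings by mean Likert score, best first.

    responses maps an ordering (a tuple of labels) to the list of
    answers participants gave for expressions using that ordering.
    """
    scored = {
        order: mean(LIKERT[answer] for answer in answers)
        for order, answers in responses.items()
    }
    return sorted(scored.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Made-up example data for illustration only, not study results.
    example = {
        ("upper", "lower"): ["agree", "strongly agree"],
        ("lower", "upper"): ["disagree", "neutral"],
    }
    for order, score in rank_orderings(example):
        print(order, score)
```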

“Current research in our lab is looking to determine the viability of these new robotics-based teaching techniques and, in the future, we hope to be able to significantly reduce underlying biases in medical students in under an hour of training,” said Dr. Thrishantha Nanayakkara, the director of Morph Lab.