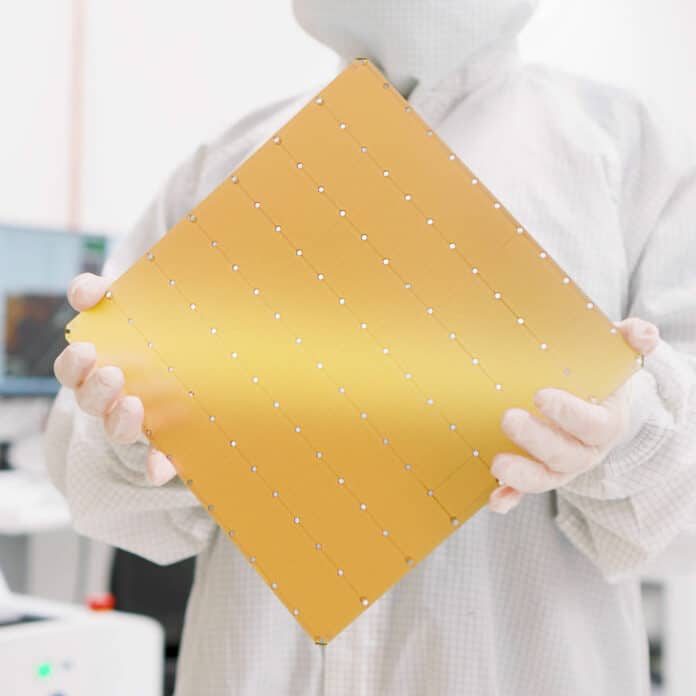

California-based Cerebras Systems has extended its claim to the world's fastest AI chip with the introduction of the Wafer Scale Engine 3 (WSE-3). The new chip delivers twice the performance of its predecessor, the WSE-2, at the same power draw and the same price.

The WSE-3 is built to train the largest AI models in the industry and has 4 trillion transistors on a 5nm-based design. It powers the Cerebras CS-3 AI supercomputer with 900,000 AI-optimized compute cores and can deliver 125 petaflops of peak AI performance.

AI models such as GPT have gained widespread attention for their impressive capabilities. However, technology companies acknowledge that these models are still at an early stage of development and need further improvement before they can fully revolutionize the market.

To achieve this, AI models need to be trained on larger datasets, which in turn demands even more powerful infrastructure. This has fueled the rise of chipmaker Nvidia, whose commercially available H200, with 80 billion transistors, is widely used for training AI models. Cerebras aims to push the boundaries further with the WSE-3, which is expected to deliver a 57-fold increase in performance.
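For a sense of scale, the two transistor counts quoted in this article can be compared directly. The short Python sketch below is only back-of-the-envelope arithmetic on those figures; the variable and function names are illustrative, and the result is a raw transistor ratio, not a performance measurement and not the 57-fold figure cited above.

```python
# Transistor counts as quoted in the article (names are illustrative).
WSE3_TRANSISTORS = 4_000_000_000_000  # 4 trillion
H200_TRANSISTORS = 80_000_000_000     # 80 billion


def transistor_ratio(larger: int, smaller: int) -> float:
    """Return how many times more transistors the larger chip has."""
    return larger / smaller


ratio = transistor_ratio(WSE3_TRANSISTORS, H200_TRANSISTORS)
print(f"WSE-3 has about {ratio:.0f}x the transistors of the H200")
```

On these numbers the wafer-scale chip carries about 50 times as many transistors as the GPU.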

Built on a 5nm process, the WSE-3 supplies the CS-3 supercomputer with 900,000 cores optimized for AI data processing. The CS-3 has 44GB of on-chip SRAM and can store 24 trillion parameters in a single logical memory space, which simplifies the training workflow and improves developer productivity.

The external memory on the CS-3 can be scaled up to 1.2 petabytes to train models ten times bigger than GPT-4 or Gemini. The CS-3 can be configured for enterprise or hyperscale needs. In a four-system configuration, it can fine-tune a 70-billion-parameter model in a day, and in a 2048-system configuration, it can train the 70-billion-parameter Llama model from scratch in a day.

The latest Cerebras Software Framework provides native support for PyTorch 2.0 and the latest AI models and techniques, such as multi-modal models, vision transformers, mixture of experts, and diffusion. According to the company, Cerebras is the only platform that provides native hardware acceleration for dynamic and unstructured sparsity, which can speed up training by up to 8x.

“WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models. We are thrilled to bring WSE-3 and CS-3 to market to help solve today’s biggest AI challenges,” said Andrew Feldman, CEO and co-founder of Cerebras.

The CS-3 system is optimized for AI work. Cerebras says it delivers more computing performance while taking up less space and using less power than any other system. Notably, while GPU power consumption has doubled from one generation to the next, the CS-3 doubles performance within the same power envelope.

Another advantage of the CS-3 is its ease of use. It requires 97% less code than GPUs for LLMs and can train models ranging from 1B to 24T parameters in purely data-parallel mode. What’s more, a standard implementation of a GPT-3-sized model required just 565 lines of code on Cerebras, an industry record.

The CS-3 will play a significant role in the strategic partnership between Cerebras and G42. The partnership has already delivered a massive amount of AI supercomputer performance with Condor Galaxy 1 and Condor Galaxy 2, both of which are among the largest AI supercomputers in the world.

Cerebras and G42 recently announced that construction of Condor Galaxy 3 is underway. Built with 64 CS-3 systems, it will produce 8 exaFLOPs of AI compute, which will also place it among the largest AI supercomputers in the world. It will be the third installation in the Condor Galaxy network, through which the partnership is set to deliver tens of exaFLOPs of AI computing. The Condor Galaxy network has already trained some of the industry’s leading open-source models, including Jais-30B, Med42, Crystal-Coder-7B, and BTLM-3B-8K.

“Our strategic partnership with Cerebras has been instrumental in propelling innovation at G42 and will contribute to the acceleration of the AI revolution on a global scale,” said Kiril Evtimov, G42’s Group CTO. “Condor Galaxy 3, our next AI supercomputer boasting 8 exaFLOPs, is currently under construction and will soon bring our system’s total production of AI compute to 16 exaFLOPs.”
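The Condor Galaxy 3 figures quoted in this article are consistent with one another, as the short Python sketch below shows. It assumes that peak performance simply adds up across systems, which is an idealization; the constant and function names are illustrative and do not come from Cerebras or G42.

```python
PETAFLOPS_PER_EXAFLOP = 1_000

# Figures as quoted in the article (names are illustrative).
CS3_PEAK_PETAFLOPS = 125       # peak AI performance of one CS-3
CG3_SYSTEMS = 64               # CS-3 systems in Condor Galaxy 3
TOTAL_AFTER_CG3_EXAFLOPS = 16  # network total cited by G42


def cluster_exaflops(systems: int, petaflops_per_system: int) -> float:
    """Aggregate peak performance, assuming ideal linear scaling."""
    return systems * petaflops_per_system / PETAFLOPS_PER_EXAFLOP


cg3_exaflops = cluster_exaflops(CG3_SYSTEMS, CS3_PEAK_PETAFLOPS)
# Whatever is left of the quoted total is attributed to the earlier installations.
existing_exaflops = TOTAL_AFTER_CG3_EXAFLOPS - cg3_exaflops

print(f"Condor Galaxy 3: {cg3_exaflops:.0f} exaFLOPs")
print(f"Implied earlier installations: {existing_exaflops:.0f} exaFLOPs")
```

Sixty-four systems at 125 petaflops each give the stated 8 exaFLOPs, and the 16 exaFLOP total implies that the earlier installations together account for the other 8.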