An autonomous, unmanned robotic vehicle has used its robotic arm to take its first seabed sample without human intervention. This was confirmed by the US-based Woods Hole Oceanographic Institution (WHOI), which led the recent international mission to the Aegean with Greek participation.

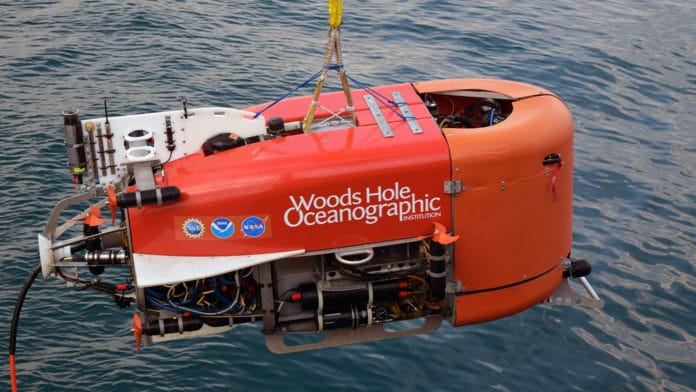

WHOI’s underwater robot NUI (Nereid Under Ice) reached the mineral-rich seafloor of Kolumbo, an active submarine volcano off Greece’s famed island of Santorini. This is the first known sample collected autonomously by a robot in the ocean. The sample will be used to better understand the microbial life that inhabits the volcano.

As mission leader Richard Camilli put it, “For a vehicle to take a sample without a pilot driving, it was a huge step forward. One of our goals was to toss out the joystick, and we were able to do just that.”

The mission, which set out to explore the Kolumbo volcano and to test autonomous underwater technologies, is part of NASA’s interdisciplinary Planetary Science and Technology from Analog Research (PSTAR) program.

With no pilot at the controls, a computer algorithm had to take over the steering of WHOI’s robot. This is a demanding task, since the vehicle operates in a difficult and dangerous environment where any unforeseen event can end in disaster.

The NUI robot is roughly the size of a Smart car. It is equipped with artificial intelligence (AI)-based automated planning software, including a planner called “Spock,” which allowed the vehicle to decide which sites on the volcano to visit and to take samples autonomously.

This level of autonomy is particularly important for NASA, which is seeking to develop technologies for future missions to explore ocean worlds beyond Earth.

Next, the team will continue training ocean robots, investigating how “gaze tracking” technology can be used and building a robust natural-language interface so that scientists can communicate directly with robots without a pilot acting as intermediary.

Funding for this project was provided by a NASA PSTAR grant and a grant from the National Science Foundation’s National Robotics Initiative.