Quadruped robots are inherently more stable than biped robots, especially while moving. In navigation and locomotion, most four-legged robots are trained to regain their footing if they stumble over an obstacle.

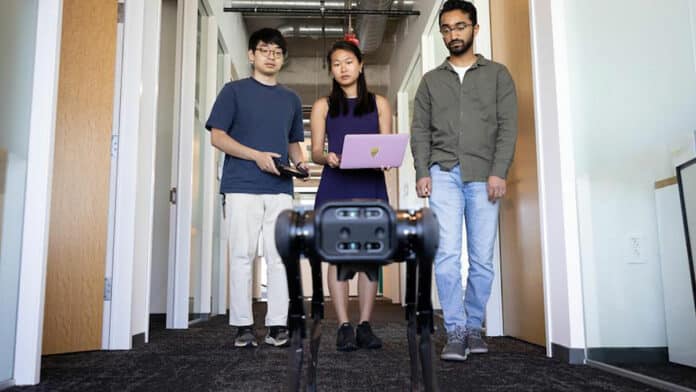

Researchers from the Georgia Institute of Technology have developed Visual Navigation and Locomotion (ViNL), a system that uses visual input to help a quadruped robot step over obstacles instead of stepping on them.

This visual input enables a quadruped robot to navigate an unseen apartment by stepping over small obstacles in its path (e.g., shoes, toys, cables), much as humans and pets lift their feet over objects while walking.

ViNL has two separate visual policies. The first is a visual navigation policy that outputs linear and angular velocity commands to guide the robot to goal coordinates in an unfamiliar indoor environment. The second is a visual locomotion policy that controls the robot’s joints to avoid stepping on obstacles while following those velocity commands.

Both policies are model-free: each is a neural network that maps sensor readings directly to actions, learned end-to-end in simulation rather than by imitating pre-existing behavior. The two policies are trained independently in two completely different simulators and then co-deployed by feeding the navigator’s velocity commands to the locomotor, completely “zero-shot,” without any co-training.
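To make this division of labor concrete, here is a minimal Python sketch of how a navigator and a locomotor can be chained through a velocity-command interface. It is not the researchers’ code: ViNL’s policies are learned neural networks driven by camera input, whereas `NavigationPolicy`, `LocomotionPolicy`, and `run_episode` below are hypothetical hand-written stand-ins, and the speed limits, time step, and success radius are assumed values chosen only for illustration. The locomotor stub simply integrates the command into a 2D pose instead of producing joint targets.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class VelocityCommand:
    """The only interface between the two (hypothetical) policies."""
    linear: float   # forward speed in m/s
    angular: float  # yaw rate in rad/s


class NavigationPolicy:
    """Hypothetical stand-in for the learned navigator.

    ViNL's navigator is a neural network fed by vision; this stub just
    steers toward a known 2D goal with a proportional heading controller.
    """

    def __init__(self, max_linear: float = 0.5, max_angular: float = 1.0):
        self.max_linear = max_linear    # assumed speed limit (m/s)
        self.max_angular = max_angular  # assumed turn-rate limit (rad/s)

    def act(self, pose: tuple[float, float, float], goal: tuple[float, float]) -> VelocityCommand:
        x, y, heading = pose
        dx, dy = goal[0] - x, goal[1] - y
        # Bearing error to the goal, wrapped to [-pi, pi].
        bearing = math.atan2(dy, dx) - heading
        bearing = math.atan2(math.sin(bearing), math.cos(bearing))
        angular = max(-self.max_angular, min(self.max_angular, 2.0 * bearing))
        # Turn in place when the goal is far off-axis; otherwise drive,
        # slowing down as the goal gets closer.
        if abs(bearing) < math.pi / 4:
            linear = min(self.max_linear, math.hypot(dx, dy))
        else:
            linear = 0.0
        return VelocityCommand(linear, angular)


class LocomotionPolicy:
    """Hypothetical stand-in for the learned locomotor.

    ViNL's locomotor outputs joint commands; this stub assumes perfect
    velocity tracking and integrates the command into a 2D pose.
    """

    def step(self, pose: tuple[float, float, float], cmd: VelocityCommand, dt: float) -> tuple[float, float, float]:
        x, y, heading = pose
        heading += cmd.angular * dt
        x += cmd.linear * math.cos(heading) * dt
        y += cmd.linear * math.sin(heading) * dt
        return (x, y, heading)


def run_episode(nav, loco, start, goal, dt=0.1, max_steps=500, success_radius=0.2):
    """Feed the navigator's commands to the locomotor until the goal is reached."""
    pose = start
    for step in range(max_steps):
        if math.hypot(goal[0] - pose[0], goal[1] - pose[1]) < success_radius:
            return True, step
        cmd = nav.act(pose, goal)
        pose = loco.step(pose, cmd, dt)
    return False, max_steps


if __name__ == "__main__":
    reached, steps = run_episode(NavigationPolicy(), LocomotionPolicy(),
                                 start=(0.0, 0.0, 0.0), goal=(2.0, 0.0))
    print(f"reached goal: {reached} after {steps} steps")
```

The two components share nothing but the `VelocityCommand` type, so either stub could in principle be replaced by a trained network without changing the other. That separation mirrors, in simplified form, why independently trained navigation and locomotion policies can be combined without co-training.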

“The main motivation of the project is getting low-level control over the legs of the robot that also incorporates visual input,” said Naoki Yokoyama, a Ph.D. student at the School of Electrical and Computer Engineering. “We envisioned a controller that could be deployed in an indoor setting with a lot of clutter, such as shoes or toys on the ground of a messy home. Whereas blind locomotive controllers tend to be more reactive (if they step on something, they’ll make sure they don’t fall over), we wanted ours to use visual input to avoid stepping on the obstacle altogether.”

“Normally, it matters much less how you get from Point A to Point B,” Joanne Truong, a Ph.D. student in the School of Interactive Computing, said. “You just need to know that Point B is valid. With overcoming obstacles, not only do Point A and Point B need to be valid, how you get from Point A to Point B also matters.”

ViNL aims to move the robot from one place to another while avoiding obstacles. The researchers trained the robot to step over the kinds of objects it is likely to encounter in a house, such as toys, and to go around an obstruction that is too high to step over. ViNL also helps the robot judge how high it needs to lift its legs. So far, ViNL has guided robots through novel, cluttered simulated environments with a 72.6% success rate.

By combining a high-level visual navigation policy with a visual locomotion policy, this training approach could give quadruped robots better stability and help them keep walking without stumbling. With further development, the same technology could help other kinds of robots walk without stumbling.

Reference:

- Simar Kareer, Naoki Yokoyama, Dhruv Batra, Sehoon Ha, Joanne Truong. ViNL: Visual Navigation and Locomotion Over Obstacles. International Conference on Robotics and Automation (ICRA), 2023.