Robotic systems are increasingly used in domains such as manufacturing, healthcare, agriculture, and defense. However, they also pose significant risks to human safety and security, so it is essential to ensure that they operate safely and securely and comply with ethical and legal standards.

To enable the trusted operation of military robotic vehicles in contested environments, artificial intelligence experts from Charles Sturt University and the University of South Australia (UniSA) have designed an algorithm that can intercept a man-in-the-middle (MitM) cyberattack on an unmanned military robot and shut it down in seconds.

The algorithm uses deep learning neural networks, which are loosely inspired by the structure of the human brain, and was trained to recognize the signature of a MitM eavesdropping cyberattack. In this type of attack, an adversary secretly inserts itself into an existing conversation or data transfer, where it can intercept, and potentially alter, the messages being exchanged.

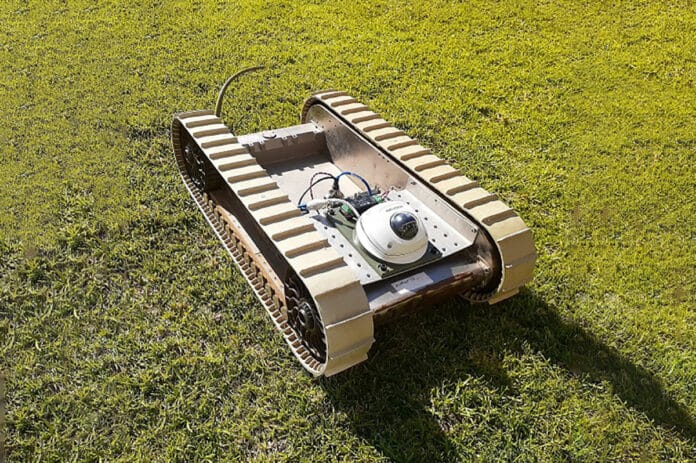

In tests on a replica of a US Army combat ground vehicle, the algorithm prevented 99% of malicious attacks with a false positive rate below 2%. These results suggest the system could help improve security in military settings.
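The two figures measure different things. Assuming the 99% figure is a detection rate (the share of real attacks the system flags), the false positive rate is the share of normal traffic it wrongly flags as an attack. The minimal sketch below shows how both are computed from confusion-matrix counts; the function name and the counts are hypothetical illustrations, not data from the study.

```python
def detection_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Return detection rate and false positive rate from confusion-matrix counts.

    tp: attacks correctly flagged    fn: attacks missed
    fp: normal traffic wrongly flagged    tn: normal traffic correctly passed
    """
    attacks = tp + fn  # every sample that really contained an attack
    benign = fp + tn   # every sample of normal traffic
    if attacks == 0 or benign == 0:
        raise ValueError("need at least one attack sample and one benign sample")
    return {
        "detection_rate": tp / attacks,     # share of attacks caught
        "false_positive_rate": fp / benign,  # share of normal traffic misflagged
    }


# Hypothetical counts, NOT from the study: 1,000 attack and 1,000 benign samples.
m = detection_metrics(tp=990, fn=10, fp=15, tn=985)
print(m["detection_rate"], m["false_positive_rate"])
```

A low false positive rate matters as much as a high detection rate on a fielded vehicle: every false alarm that triggers a shutdown interrupts a legitimate mission.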

Researchers say the proposed algorithm outperforms other recognition techniques used around the world to detect cyberattacks. The research was conducted by Professor Anthony Finn and Dr Fendy Santoso from Charles Sturt University's Artificial Intelligence and Cyber Futures Institute, in collaboration with the US Army Futures Command. They simulated a man-in-the-middle cyberattack on a GVT-BOT ground vehicle and trained its operating system to recognize the attack.

“The robot operating system (ROS) is extremely susceptible to data breaches and electronic hijacking because it is so highly networked,” Prof Finn says. “The advent of Industry 4.0, marked by the evolution of robotics, automation, and the Internet of Things, has demanded that robots work collaboratively, where sensors, actuators, and controllers need to communicate and exchange information with one another via cloud services.

“The downside of this is that it makes them highly vulnerable to cyberattacks. The good news, however, is that the speed of computing doubles every couple of years, and it is now possible to develop and implement sophisticated AI algorithms to guard systems against digital attacks.”

Despite its tremendous benefits and widespread use, the robot operating system largely ignores security in its design: its network traffic is unencrypted and its integrity-checking capability is limited.
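Integrity checking means verifying that a message was not altered in transit, which is precisely what a MitM attacker exploits when it is missing. As a minimal sketch, assuming a secret key shared in advance by sender and receiver, Python's standard `hmac` module can attach a tag to each message and reject tampered copies. The key, the command format, and the `sign`/`verify` helpers are hypothetical; they are not part of ROS or of the researchers' system.

```python
import hashlib
import hmac

# Hypothetical pre-shared key; a real deployment would provision and rotate keys securely.
SHARED_KEY = b"example-key-do-not-use"


def sign(message: bytes, key: bytes = SHARED_KEY) -> bytes:
    """Compute an HMAC-SHA256 tag that travels alongside the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag and compare in constant time to detect tampering."""
    return hmac.compare_digest(sign(message, key), tag)


cmd = b'{"linear_x": 0.5, "angular_z": 0.0}'       # hypothetical velocity command
tag = sign(cmd)
tampered = b'{"linear_x": 5.0, "angular_z": 0.0}'  # attacker alters the payload in transit

print(verify(cmd, tag))       # True
print(verify(tampered, tag))  # False
```

An HMAC detects modification but does not hide message contents, so confidentiality would additionally require encryption. It is also a preventive control, distinct from the learning-based intrusion detection the researchers describe.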

“Owing to the benefits of deep learning, our intrusion detection framework is robust and highly accurate,” Dr Santoso says in a press release. “The system can handle large datasets suitable to safeguard large-scale and real-time data-driven systems such as ROS.”

Professor Finn and Dr Santoso plan to test their intrusion detection algorithm on other robotic platforms, such as drones, whose dynamics are faster and more complex than those of a ground robot.