Visually impaired people could soon do without a cane or guide dog thanks to a new device developed by engineers at the University of Georgia in the United States. Designed by artificial intelligence (AI) developer Jagadish K. Mahendran and his team, the AI-powered, voice-activated backpack helps visually impaired people navigate and perceive the world around them. The system tracks obstacles in real time and describes the user's surroundings.

The backpack detects common hazards such as traffic signs, hanging obstacles, crosswalks, moving objects, and changes in elevation, all while running on a low-power, interactive device.

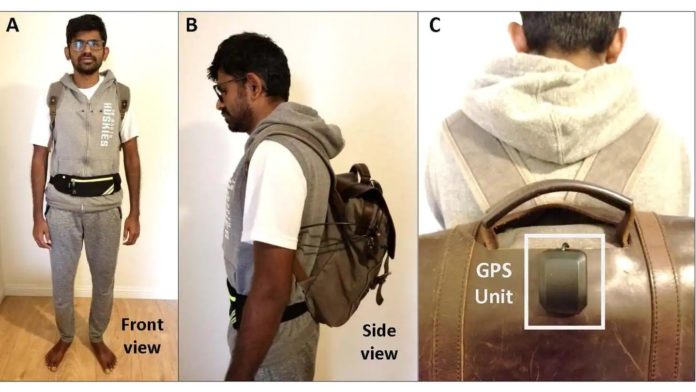

According to the engineering team, the main design goal was to hide the electronics so that users don’t look like cyborgs walking down the street. The system is housed inside a small backpack containing a host computing unit, such as a laptop, with an attached GPS device.

A vest conceals a set of cameras: a single 4K camera that provides color information and a pair of stereoscopic cameras that measure depth. A fanny pack holds a pocket-size battery pack that provides approximately eight hours of use. A Luxonis OAK-D spatial AI camera can be attached to either the vest or the fanny pack and connected to the computing unit in the backpack.

The OAK-D is a versatile AI device that runs on an Intel Movidius VPU and uses the Intel Distribution of OpenVINO toolkit for on-chip edge AI inference. It can run advanced neural networks while also providing accelerated computer vision functions.

The results are relayed as audio via Bluetooth to a pair of earphones, letting users know what is around them. Users can interact with the system through voice queries and commands, and the system responds with spoken information. It describes common obstacles, including signs, tree branches, and pedestrians, and warns of upcoming crosswalks, curbs, staircases, and entryways.

The system provides far more detailed information about the surroundings than a cane or guide dog, and could allow people with limited vision to navigate the world independently. It is still in the early stages, but the team hopes to speed up development by making the project non-commercial and open source. The system currently requires a computer, but we imagine that in the future it could run on a smartphone, or perhaps one day be integrated into smart glasses.