Tech corporation IBM has unveiled a prototype analog AI chip that performs deep neural network computations with high energy efficiency and accuracy.

We’re only at the beginning of an AI revolution that will redefine how we live and work. Deep neural networks (DNNs) are among its most important technologies, and the emergence of foundation models and generative AI has brought them even more attention. However, traditional digital computing architectures struggle to meet the demands of these models: model weights must be shuttled constantly between separate memory and processing units, which limits both performance and energy efficiency.

IBM Research has been exploring new ways to perform AI computation. One promising approach is analog in-memory computing, or simply analog AI, which tackles this problem by borrowing key features of how neural networks run in biological brains, where memory and computation are not kept separate.

To turn analog AI into reality, two key challenges must be addressed. First, the analog in-memory compute arrays must compute with a level of precision that matches existing digital systems. Second, they must interface seamlessly with the other digital compute units and the digital communication fabric on the analog AI chip.

IBM Research has now taken a significant step toward addressing these challenges with a state-of-the-art, mixed-signal analog AI chip for running a variety of DNN inference tasks.

According to the company, it is the first analog chip shown in testing to be as capable at computer vision AI tasks as its digital counterparts while being considerably more energy efficient.

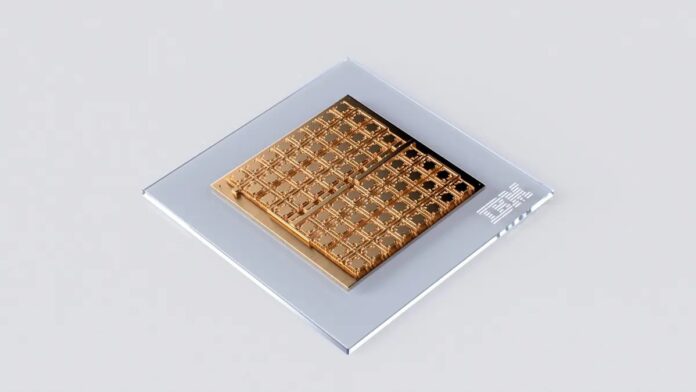

The chip comprises 64 analog in-memory compute (AIMC) cores, or tiles, each containing a 256-by-256 crossbar array of synaptic unit cells. Each tile integrates compact, time-based analog-to-digital converters to move between the analog and digital domains, along with lightweight digital processing units that perform simple nonlinear neuronal activation functions and scaling operations.
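To make a tile's data path more concrete, here is a minimal Python sketch of one layer passing through a single 256-by-256 tile: an idealized analog matrix-vector multiply, a quantization step, and a digital activation. It is not IBM's design. The uniform quantizer is a stand-in for the chip's time-based ADCs, the Gaussian noise term is a crude placeholder for analog non-idealities, signed weights stand in for the differential device pairs a real array would need, and all names (`crossbar_mvm`, `adc`, `digital_postprocess`, `run_tile`) and parameters such as the 8-bit resolution are hypothetical.

```python
import random

TILE_SIZE = 256  # each AIMC core holds a 256-by-256 crossbar, per the article


def crossbar_mvm(conductances, voltages, noise_std=0.0, rng=None):
    """Idealized analog matrix-vector multiply on one crossbar.

    Each cell contributes current G[i][j] * V[i] (Ohm's law) and the currents
    on a column add up (Kirchhoff's current law). Signed values stand in for
    the differential device pairs a real array would need (assumption).
    Optional Gaussian noise is a crude placeholder for device non-idealities.
    """
    rng = rng or random.Random(0)
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("input vector length must match the number of rows")
    currents = []
    for j in range(n_cols):
        total = sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        if noise_std > 0:
            total += rng.gauss(0.0, noise_std)
        currents.append(total)
    return currents


def adc(currents, bits=8, full_scale=1.0):
    """Uniform signed quantizer standing in for the time-based ADCs
    (the real converter circuit is not modeled). Returns codes and step size."""
    levels = 2 ** (bits - 1) - 1
    step = full_scale / levels
    codes = [round(max(-full_scale, min(full_scale, c)) / step) for c in currents]
    return codes, step


def digital_postprocess(codes, step, gain=1.0):
    """Per-tile digital unit: rescale ADC codes and apply a ReLU activation."""
    return [max(0.0, code * step * gain) for code in codes]


def run_tile(weights, inputs, noise_std=0.0, bits=8, full_scale=1.0):
    """One layer on one tile: analog MVM -> ADC -> digital activation."""
    currents = crossbar_mvm(weights, inputs, noise_std)
    codes, step = adc(currents, bits, full_scale)
    return digital_postprocess(codes, step)


if __name__ == "__main__":
    rng = random.Random(42)
    # Random weights scaled so column sums typically stay inside the ADC range.
    w = [[rng.uniform(-1, 1) / 32 for _ in range(TILE_SIZE)] for _ in range(TILE_SIZE)]
    x = [rng.uniform(0, 1) for _ in range(TILE_SIZE)]
    exact = [max(0.0, v) for v in crossbar_mvm(w, x)]
    analog = run_tile(w, x, noise_std=0.005)
    worst = max(abs(a - e) for a, e in zip(analog, exact))
    print(f"worst-case deviation from exact ReLU(Wx): {worst:.4f}")
```

Comparing the noisy, quantized output against the exact `ReLU(Wx)` gives a rough feel for the precision challenge described earlier; the error on real hardware depends on device physics this sketch does not attempt to model.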

Each tile can perform the computations associated with a layer of a DNN model. In addition, a global digital processing unit is integrated in the middle of the chip for more complex operations critical for the execution of certain types of neural networks.
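The article does not describe how model layers are placed on tiles. One simple way to reason about it, assumed for this sketch, is to treat each layer as a weight matrix (with convolutions unrolled im2col-style) and split any matrix larger than 256 by 256 across several tiles, combining partial results digitally. The `Layer`, `conv_as_matrix`, `tiles_needed`, and `plan` names and the greedy placement are hypothetical and are not IBM's compiler or mapping scheme.

```python
import math
from dataclasses import dataclass

TILE_ROWS = TILE_COLS = 256  # crossbar size per tile, from the article
NUM_TILES = 64               # tiles on the chip, from the article


@dataclass
class Layer:
    """A weight layer viewed as an in_features x out_features matrix (hypothetical helper)."""
    name: str
    in_features: int   # matrix rows, driven by the layer's inputs
    out_features: int  # matrix columns, read out as the layer's outputs


def conv_as_matrix(name: str, kernel: int, in_ch: int, out_ch: int) -> Layer:
    """Unroll a kernel x kernel convolution into a (kernel*kernel*in_ch) x out_ch matrix."""
    return Layer(name, kernel * kernel * in_ch, out_ch)


def tiles_needed(layer: Layer) -> int:
    """Number of 256x256 tiles needed to hold the layer's weights.
    Splitting along rows means partial sums must be added digitally (assumption)."""
    row_blocks = math.ceil(layer.in_features / TILE_ROWS)
    col_blocks = math.ceil(layer.out_features / TILE_COLS)
    return row_blocks * col_blocks


def plan(layers: list[Layer], num_tiles: int = NUM_TILES) -> dict[str, list[int]]:
    """Greedily assign consecutive tile indices to each layer, in order."""
    assignment: dict[str, list[int]] = {}
    next_tile = 0
    for layer in layers:
        n = tiles_needed(layer)
        if next_tile + n > num_tiles:
            raise RuntimeError(
                f"{layer.name} needs {n} tiles but only {num_tiles - next_tile} remain"
            )
        assignment[layer.name] = list(range(next_tile, next_tile + n))
        next_tile += n
    return assignment


if __name__ == "__main__":
    net = [
        conv_as_matrix("conv1", 3, 3, 64),    # 27 x 64   -> 1 tile
        conv_as_matrix("conv2", 3, 64, 128),  # 576 x 128 -> 3 tiles
        Layer("fc", 512, 10),                 # 512 x 10  -> 2 tiles
    ]
    for name, tiles in plan(net).items():
        print(name, tiles)
```

With this toy network, `conv1` lands on tile 0, `conv2` on tiles 1 to 3, and the classifier on tiles 4 and 5. A layer only maps to exactly one tile when its matrix fits within 256 by 256; anything larger consumes several of the 64 tiles.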

With this approach, the IBM team demonstrated near-software-equivalent inference accuracy with ResNet and long short-term memory (LSTM) networks, including 92.81% accuracy on the CIFAR-10 image dataset. They also showed that analog in-memory computing can be seamlessly combined with several digital processing units and a digital communication fabric.

IBM also reports that the chip's measured matrix–vector multiplication throughput per unit area, 400 giga-operations per second per square millimeter (400 GOPS/mm²), is more than 15 times higher than that of previous multi-core in-memory computing chips based on resistive memory, at comparable energy efficiency.
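The headline figure can be put in perspective with some simple arithmetic. The sketch below assumes the common convention of counting one multiply and one add per weight, so a full 256-by-256 matrix-vector multiply counts as 2 × 256 × 256 = 131,072 operations; the op-counting convention behind IBM's figure is not stated in the article, and the helper names are illustrative only.

```python
TILE = 256                     # crossbar dimension per tile
REPORTED_GOPS_PER_MM2 = 400.0  # measured throughput density reported by IBM
IMPROVEMENT_FACTOR = 15.0      # "more than 15 times higher"


def prior_chip_upper_bound(gops_per_mm2: float, factor: float) -> float:
    """If the new chip is more than `factor` times denser, earlier chips sat below this."""
    return gops_per_mm2 / factor


def ops_per_full_tile_mvm(n: int = TILE) -> int:
    """n x n MVM counted as 2*n*n ops: one multiply and one add per weight (assumed convention)."""
    return 2 * n * n


def full_tile_mvms_per_s_per_mm2(gops_per_mm2: float, n: int = TILE) -> float:
    """Throughput density expressed as full-tile MVM equivalents per second per mm^2."""
    return gops_per_mm2 * 1e9 / ops_per_full_tile_mvm(n)


if __name__ == "__main__":
    bound = prior_chip_upper_bound(REPORTED_GOPS_PER_MM2, IMPROVEMENT_FACTOR)
    print(f"earlier chips: below {bound:.1f} GOPS/mm^2")
    print(f"ops per 256x256 MVM: {ops_per_full_tile_mvm():,}")
    print(f"full-tile MVMs/s/mm^2: {full_tile_mvms_per_s_per_mm2(REPORTED_GOPS_PER_MM2):,.0f}")
```

Under these assumptions, 400 GOPS/mm² corresponds to roughly 3 million full-tile matrix-vector multiplies per second for every square millimeter of chip, and "more than 15 times higher" places the earlier resistive-memory chips below about 27 GOPS/mm².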