For many years, chip companies have tried to make the processor, the brain of a computer, as small as possible. As a result, many of the chips we see today are smaller than a fingernail.

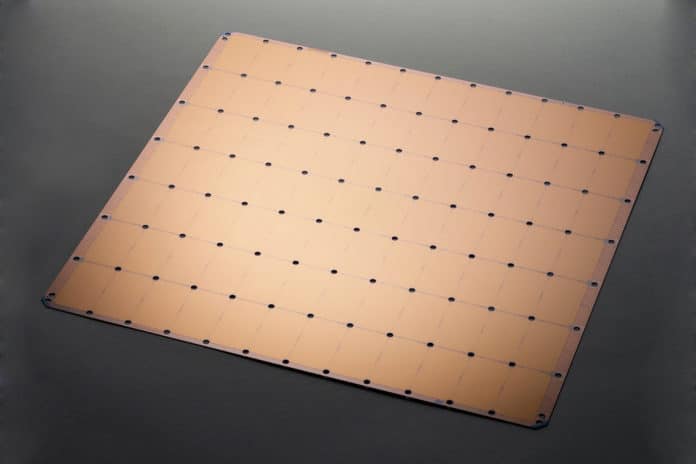

However, the Californian startup Cerebras Systems thinks differently. Last week, the company turned heads when it took the wraps off the largest semiconductor chip ever built. Measuring roughly 8 inches by 8 inches (about the size of an iPad), the chip is at least 50 times larger than similar chips available today.

The 46,225-square-millimeter chip, dubbed the Wafer Scale Engine (WSE), packs 1.2 trillion transistors, 400,000 computing cores, and 18 gigabytes of on-chip memory.

The logic behind building a bigger chip is quite simple. AI workloads are growing rapidly, but training a new model is both expensive and time-consuming, often taking weeks or even months on currently available systems.

According to Cerebras founder Andrew Feldman, artificial intelligence software needs huge amounts of data to improve, so processors must crunch that data as quickly as possible. Bigger chips process more information more quickly. That’s where Cerebras’ new chip comes in: it can help meet the enormous computational demands of deep learning and deliver results in less time.

The WSE is a single chip that spans a single wafer, which allows for much faster data transfer and greater processing capacity. It is about 56 times larger than the largest graphics processing unit (GPU), which measures a mere 815 square millimeters and holds 21.1 billion transistors.
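The size comparison can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this article; the variable names are illustrative, and the side length assumes the chip is a perfect square, which the article does not state exactly.

```python
import math

# Figures quoted in the article
WSE_AREA_MM2 = 46_225        # Wafer Scale Engine area, square millimeters
WSE_TRANSISTORS = 1.2e12     # 1.2 trillion
GPU_AREA_MM2 = 815           # largest GPU area, square millimeters
GPU_TRANSISTORS = 21.1e9     # 21.1 billion

MM_PER_INCH = 25.4

# How many times larger the WSE is, by area and by transistor count
area_ratio = WSE_AREA_MM2 / GPU_AREA_MM2
transistor_ratio = WSE_TRANSISTORS / GPU_TRANSISTORS

# Transistors per square millimeter for each chip
wse_density = WSE_TRANSISTORS / WSE_AREA_MM2
gpu_density = GPU_TRANSISTORS / GPU_AREA_MM2

# Side length, ASSUMING a perfectly square chip
side_mm = math.sqrt(WSE_AREA_MM2)
side_in = side_mm / MM_PER_INCH

print(f"Area ratio:       {area_ratio:.1f}x")
print(f"Transistor ratio: {transistor_ratio:.1f}x")
print(f"WSE density:      {wse_density / 1e6:.2f} million transistors per mm^2")
print(f"GPU density:      {gpu_density / 1e6:.2f} million transistors per mm^2")
print(f"Side length:      {side_mm:.0f} mm ({side_in:.2f} in)")
```

The area and transistor ratios both come out a little under 57, and the two chips have nearly the same transistor density (about 26 million per square millimeter). On these numbers, the WSE's advantage comes from sheer area rather than from packing transistors more tightly.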

The company claims that the WSE has 3,000 times more high-speed on-chip memory, 10,000 times more memory bandwidth, and 33 times more fabric bandwidth than today’s leading GPU. This means the giant chip can perform more calculations more efficiently and dramatically reduce the time it takes to train an AI model.

The Cerebras chip is clearly not intended for home use, since such a huge piece of silicon is difficult to connect and cool. Instead, the product will be offered as part of a new server to be installed in data centers, where much of the heavy-duty processing behind today’s cloud-based AI tools is carried out.

There is no word yet on customers or on when the hardware will be available for wider use.