Over the past 50 years, robots have become very good at working in tightly controlled spaces, such as car-assembly lines. But the world is not a predictable assembly line. Although humans find it trivial to interact with the countless objects and environments beyond the factory gates, doing so is tremendously difficult for robots.

Robotics researchers around the world are working to make robots that can perform a variety of tasks in a human-like manner. As part of that effort, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have teamed up with a group at the Toyota Research Institute. They have designed a system that can grasp tools and apply the appropriate amount of force for a given task, like squeegeeing up liquid or writing out a word with a pen.

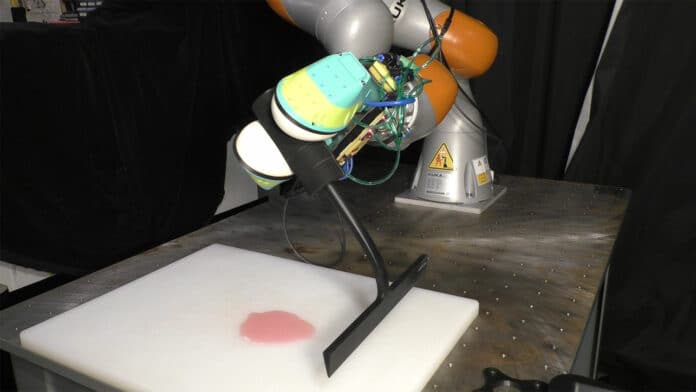

Called Series Elastic End Effectors (SEED), the system uses soft bubble grippers and embedded cameras to map how the grippers deform over a six-dimensional space and apply force to a tool. With six degrees of freedom, an object can be translated left and right, up and down, and back and forth, and rotated in roll, pitch, and yaw. The system employs a learning algorithm so that the bubble grippers exert precisely the right amount of force on a tool for its proper use. A closed-loop controller uses SEED and visuotactile feedback to adjust the position of the robot arm so that it applies the desired force.

With SEED, at every control step the robot captures a fresh 3D image from the grippers, tracking in real time how they change shape around an object. The system uses these images to reconstruct the position of the tool, and a learned model then maps that position to an estimate of the force being applied.
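The mapping from tool position to force can be illustrated with a minimal sketch. It assumes a linear elastic model, in which a 6x6 stiffness matrix turns the tool's 6D displacement inside the grippers into a force and torque estimate. The linear form, the function name `estimate_wrench`, and all numbers are illustrative assumptions, not details taken from the researchers' implementation.

```python
# Sketch only: assumes the learned model is a linear spring in 6D.
# Displacement layout (hypothetical): [dx, dy, dz, droll, dpitch, dyaw],
# in meters for translation and radians for rotation.

def estimate_wrench(stiffness, displacement):
    """Multiply a 6x6 stiffness matrix by a 6D displacement.

    Returns [fx, fy, fz, tx, ty, tz] (forces in N, torques in N*m).
    """
    return [sum(k * d for k, d in zip(row, displacement)) for row in stiffness]

# Made-up diagonal stiffness: 200 N/m in translation, 2 N*m/rad in rotation.
K = [[(200.0 if i < 3 else 2.0) if i == j else 0.0 for j in range(6)]
     for i in range(6)]

# Made-up displacement of the tool relative to its rest pose in the grippers.
displacement = [0.01, 0.0, -0.005, 0.0, 0.1, 0.0]

wrench = estimate_wrench(K, displacement)
print(wrench)
```

A full matrix rather than a diagonal one would also capture coupling between axes, for example a sideways push that produces a torque.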

The learned model is obtained from the robot’s previous experience, in which it disturbs a force-torque sensor to figure out how stiff the bubble grippers are. Once the robot has sensed the force, it compares it with the force that the user commands, the researchers say. It then moves in the direction that brings the applied force toward the commanded value, all over 6D space. The research team implemented the system on a robotic arm and put it through its paces in a series of trials.
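The calibrate-then-correct cycle described above can be sketched for a single axis. The sketch assumes a linear spring model fitted by least squares and a simple proportional update; the function names, the gain, and the calibration data are all hypothetical, and the real system works over all six dimensions at once.

```python
# Sketch only: one axis, linear spring assumption, made-up data.

def fit_stiffness(deflections, forces):
    """Least-squares slope through the origin: k = sum(f*x) / sum(x*x)."""
    num = sum(f * x for f, x in zip(forces, deflections))
    den = sum(x * x for x in deflections)
    return num / den

def control_step(estimated_force, commanded_force, stiffness, gain=0.5):
    """Arm motion (m) that closes part of the gap to the commanded force."""
    return gain * (commanded_force - estimated_force) / stiffness

def run_loop(commanded_force, stiffness, steps=30):
    """Repeat sense, compare, move; return the final estimated force."""
    deflection = 0.0  # stand-in for the deflection seen by the cameras
    for _ in range(steps):
        estimated = stiffness * deflection  # learned model: position -> force
        deflection += control_step(estimated, commanded_force, stiffness)
    return stiffness * deflection

# Hypothetical calibration data: gripper deflection (m) against the force (N)
# read from a force-torque sensor.
deflections = [0.002, 0.004, 0.006, 0.008]
forces = [0.41, 0.79, 1.22, 1.58]

k_hat = fit_stiffness(deflections, forces)
final_force = run_loop(commanded_force=1.5, stiffness=k_hat)
print(k_hat, final_force)
```

With a gain of 0.5, each pass halves the remaining error between the estimated and commanded force, so the loop settles within a few dozen iterations in this idealized setting.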

In the squeegee task, SEED applied the right amount of force to wipe up liquid on a flat surface. The robot also successfully wrote out “MIT” with a pen and applied the right amount of force to drive a screw. Although SEED knows that it must command a force or torque for a given task, the tool will inevitably slip if grasped too hard, so there is an upper limit on how much force can be exerted.

The system currently assumes a very specific geometry for the tools: they must be cylindrical. There are still many limitations on how well it generalizes to new types of shapes. The researchers are now working on extending the framework to different shapes so that it can handle arbitrary tools in the wild.

Journal reference:

- H.J. Terry Suh, Naveen Kuppuswamy, Tao Pang, Paul Mitiguy, Alex Alspach, Russ Tedrake. SEED: Series Elastic End Effectors in 6D for visuotactile tool use. arXiv, 2022; DOI: 10.48550/arXiv.2111.01376