Robotic manipulation of cloth has applications ranging from fabric manufacturing to handling blankets and laundry. Humans use their senses of sight and touch to grab a glass or pick up a piece of cloth. It is so routine that little thought goes into it. For robots, however, these tasks are extremely difficult. The amount of data gathered through touch is hard to quantify, and the sense has been hard to simulate in robotics – until recently.

New research from Carnegie Mellon University’s Robotics Institute (RI) can help robots feel layers of cloth rather than relying on computer vision alone to see them. The work could one day allow robots to assist people with household tasks like folding laundry.

To fold laundry, robots need a sensor to mimic the way a human’s fingers can feel the top layer of a towel or shirt and grasp the layers beneath it. Researchers could teach a robot to feel the top layer of cloth and grasp it, but without the robot sensing the other layers of cloth, the robot would only ever grab the top layer and never successfully fold the cloth.

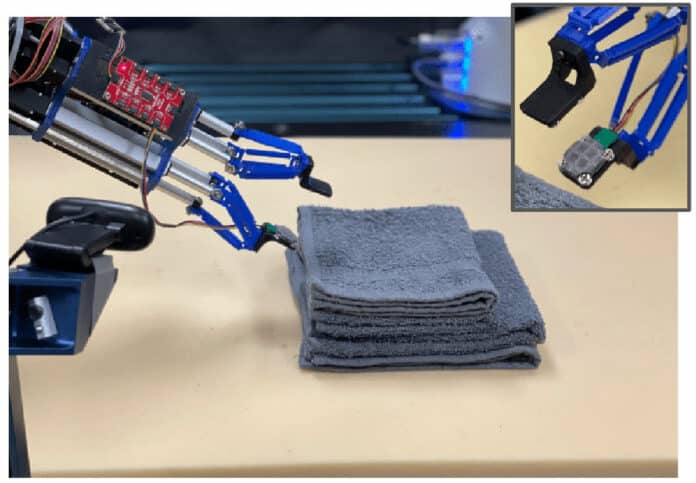

One promising solution is ReSkin, developed by researchers at Carnegie Mellon and Meta AI. This open-source touch-sensing skin is made of a thin, elastic polymer embedded with magnetic particles to measure three-axis tactile signals. In their latest study, researchers used ReSkin to help the robot feel layers of cloth rather than relying on its vision sensors to see them.

“By reading the changes in the magnetic fields from depressions or movement of the skin, we can achieve tactile sensing,” said Thomas Weng, a Ph.D. student in the R-Pad Lab, who worked on the project with RI postdoc Daniel Seita and grad student Sashank Tirumala. “We can use this tactile sensing to determine how many layers of cloth we’ve picked up by pinching with the sensor.”
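The idea in the quote, reading a change in the magnetic field relative to the skin at rest, can be illustrated with a minimal sketch. It is not the ReSkin software: the function names, the single three-axis reading, and the threshold value are assumptions made purely for illustration.

```python
import math

def field_change(baseline, reading):
    """Per-axis change (bx, by, bz) of a reading relative to the resting baseline."""
    return tuple(r - b for r, b in zip(reading, baseline))

def is_contact(baseline, reading, threshold=5.0):
    """Report contact when the field change exceeds a threshold.

    The threshold is a hypothetical value; a real sensor would need calibration.
    """
    delta = field_change(baseline, reading)
    magnitude = math.sqrt(sum(d * d for d in delta))
    return magnitude > threshold

# Example: the skin at rest versus the skin being pressed.
resting = (10.0, -2.0, 30.0)
pressed = (13.0, 2.0, 30.0)
print(field_change(resting, pressed))
print(is_contact(resting, pressed, threshold=4.0))
```

In the study described here, the readings feed a learned model that estimates the number of layers; the fixed threshold above only stands in for that step.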

Other researchers have used tactile sensing to grab rigid objects, but cloth is deformable, meaning it changes shape when you touch it. This makes the task even more difficult. Adjusting the robot’s grasp on the cloth changes both its pose and the sensor readings. Researchers didn’t teach the robot how to grasp the fabric. Instead, they taught it to estimate how many layers of fabric it was holding using the sensors in ReSkin, then to adjust its grip and try again.
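The estimate-then-adjust loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the researchers' implementation: `singulate`, `SimulatedGripper`, and the callbacks are hypothetical names, and the "classifier" simply reads the layer count from a simulated sensor value.

```python
def singulate(read_tactile, classify_layers, adjust_grip, target_layers, max_attempts=10):
    """Repeat: sense, estimate the layer count, and adjust the grip if it is wrong.

    Returns the attempt number on which the target was reached, or None.
    """
    for attempt in range(1, max_attempts + 1):
        estimate = classify_layers(read_tactile())
        if estimate == target_layers:
            return attempt
        adjust_grip(estimate, target_layers)
    return None

class SimulatedGripper:
    """Toy stand-in for a robot: the 'reading' is just the number of layers pinched."""

    def __init__(self, layers=0):
        self.layers = layers

    def read_tactile(self):
        return [float(self.layers)]

    def adjust_grip(self, estimate, target):
        # Move one layer toward the target (an assumed, idealized adjustment).
        self.layers += 1 if estimate < target else -1

gripper = SimulatedGripper(layers=0)
attempts = singulate(
    gripper.read_tactile,
    lambda reading: int(reading[0]),  # hypothetical classifier
    gripper.adjust_grip,
    target_layers=2,
)
print(attempts)
```

On a real robot each adjustment also changes the cloth's pose and the sensor readings, which is why the estimate has to be recomputed on every attempt rather than predicted in advance.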

They evaluated the robot picking up both one and two layers of cloth and used different textures and colors of cloth to demonstrate generalization beyond the training data. The thinness and flexibility of the ReSkin sensor made it possible to teach the robots how to handle something as delicate as layers of cloth.

“The profile of this sensor is so small, we were able to do this very fine task, inserting it between cloth layers, which we can’t do with other sensors, particularly optical-based sensors,” Weng said. “We were able to put it to use to do tasks that were not achievable before.”

There is still plenty of research to be done before handing the laundry basket over to a robot.

Journal reference:

- Sashank Tirumala, Thomas Weng, Daniel Seita, Oliver Kroemer, Zeynep Temel, and David Held. Learning to Singulate Layers of Cloth using Tactile Feedback. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022. DOI: 10.48550/arXiv.2207.11196