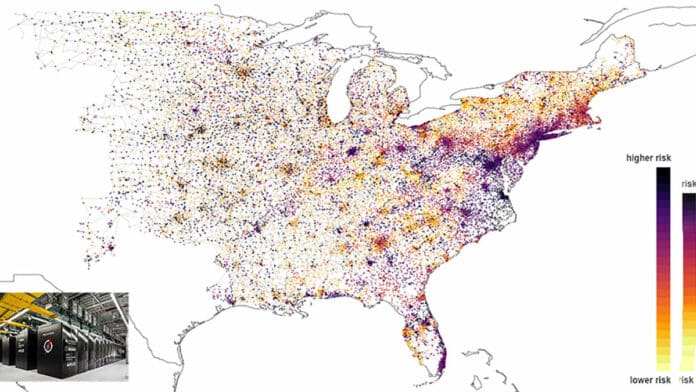

Keeping the electrical power grid running smoothly through natural disasters, severe weather and attacks is a critical national security challenge, and one that needs to be addressed proactively. The task is becoming harder as more variable renewable energy sources, such as solar and wind, are added to the grid.

To develop more efficient grid-control strategies for emergencies, a team of experts from multiple institutions has developed software that optimizes the grid's response to potential disruption events under different weather scenarios, and has run it on Oak Ridge National Laboratory's (ORNL) Frontier exascale supercomputer. Frontier recently reached the milestone of running at exascale speeds, more than one quintillion (10^18) calculations per second.

The team, which included researchers from Lawrence Livermore National Laboratory (LLNL), ORNL, the National Renewable Energy Laboratory (NREL) and Pacific Northwest National Laboratory (PNNL), was assembled at LLNL in Livermore, California, for the Exascale Computing Project's ExaSGD project.

The software, called HiOp, ran the largest simulation of its kind to date. To test its capabilities, researchers ran HiOp on 9,000 nodes of Frontier. In a 20-minute run, it determined safe, cost-optimal power grid setpoints across 100,000 possible grid failures and weather scenarios.

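The project focused on security-constrained optimal power flow (SCOPF): choosing power grid setpoints that minimize operating cost while keeping the grid within its real-world operating limits, such as voltage and frequency restrictions, both in normal operation and after any of a specified set of potential failures (contingencies).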
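For readers unfamiliar with the term, a generic textbook ("preventive") form of SCOPF can be written as follows. The notation is our own assumption and is not taken from ExaSGD or HiOp, whose actual formulation also accounts for weather scenarios and may allow corrective actions after a contingency. Here $u_0$ stands for the pre-contingency setpoints (for example, generator outputs), $x_k$ for the grid state (bus voltages and angles) in case $k$, with $k = 0$ the normal, pre-contingency case and $k = 1, \dots, K$ the contingencies; $g_k$ are the power flow equations of the network in case $k$, $h_k$ its operating limits, and $f$ the operating cost:

$$
\begin{aligned}
\min_{u_0,\,x_0,\,x_1,\,\dots,\,x_K}\quad & f(u_0, x_0) \\
\text{subject to}\quad & g_k(u_0, x_k) = 0, \qquad k = 0, 1, \dots, K, \\
& h_k(u_0, x_k) \le 0, \qquad k = 0, 1, \dots, K.
\end{aligned}
$$

Because the same setpoints $u_0$ must remain feasible in every case, each additional contingency adds another full copy of the network equations and limits, so the problem grows roughly in proportion to $K$. This is why exhaustively covering a large list of failures is so computationally demanding.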

“Because the list of potential power grid failures is large, this problem is very computationally demanding,” said Cosmin Petra, an LLNL computational mathematician and the project's principal investigator at LLNL. “The goal of this project was to show that the exascale computers are capable of exhaustively solving this problem in a manner that is consistent with current practices that power grid operators have.”

Today, human operators must respond to grid failures, and they may not be able to determine how to keep the grid running optimally under different renewable energy forecasts.

By comparison, system operators using commodity computing hardware typically consider only about 50 to 100 hand-picked contingencies and 5 to 10 weather scenarios.

“This computational problem may become even more relevant in the future, in the context of extreme climate events,” Petra said. “We could use the software stack that ran on Frontier to minimize disruptions caused by hurricanes or wildfires or to engineer the grid to be more resilient in the longer run under such scenarios, just to give an example.”

The latest version of HiOp includes several performance improvements and new linear algebra compression techniques that, over the course of the project, sped up the open-source software by a factor of 100 on Frontier's GPUs.

The team made increasingly effective use of these GPUs, outperforming CPUs in many cases. That was a notable achievement given the sparse, graph-like nature of the computations, which are harder to accelerate on GPUs than dense, regular workloads. Colleagues at PNNL validated the Frontier runs using industry-standard tools, showing that the computed pre-contingency power setpoints drastically reduce post-contingency outages with only a minimal increase in operating cost.

Because the optimization software stack is open-source and lightweight, grid system operators could scale it down and incorporate it into their current practices on commodity HPC systems in a cost-effective way.