Many content creators now use video tutorials as an effective medium for teaching physical skills such as cooking or prototyping electronics. However, making videos is not easy: the subject can drift in and out of focus, the footage can look choppy and shaky, and the sound quality can suffer. Instructors who do not have a camera operator to assist them often have to work within the confines of static cameras.

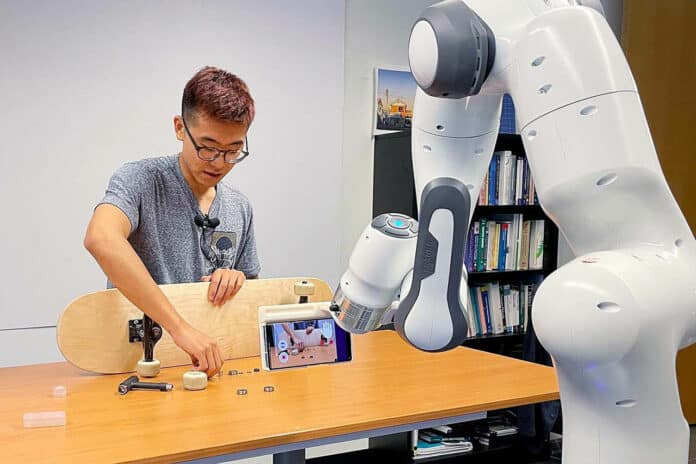

To overcome these problems, University of Toronto researchers have developed an interactive camera robot called Stargazer. This camera robot can help university teachers and content creators create engaging tutorial videos demonstrating physical skills. Stargazer can capture dynamic instructional video and overcome the difficulties of working with static cameras.

Stargazer uses a single camera on a robot arm with seven independent motors that can autonomously move with the video subject to track the region of interest. Subtle cues from an instructor can adjust the camera robot’s behavior. The cues can be body movements, gestures, and speech detected by the prototype’s sensors.

Instructor cues may include head position, torso orientation, hand position, hand gestures, raised hands, and speech. Spoken cues can suggest, for example, tight framing or a high angle.

According to the researchers, these camera control commands are naturally used by instructors to get their audience’s attention and do not interfere with instructional delivery.

For example, an instructor can have Stargazer adjust its view by pointing to each of the tools used during a tutorial, prompting the camera to pan around and frame each one. The instructor can also tell the viewer, “If you saw how I dropped the ‘A’ into the ‘B’ from above,” and Stargazer will respond by framing the action from a higher angle to give the audience a better view.
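The two examples above can be read as a small state update: a pointing cue changes what the camera looks at, while a spoken cue changes the angle or framing. The following is a minimal sketch of that idea, not the researchers' implementation. The `Shot` and `Cue` types, the `apply_cue` function, and the coordinate values are all hypothetical names and numbers introduced for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Shot:
    """Hypothetical description of what the camera should currently show."""
    target: Point                 # point the camera looks at (assumed table frame)
    angle: str = "standard"       # "standard" or "high"
    framing: str = "normal"       # "normal" or "tight"


@dataclass(frozen=True)
class Cue:
    """Hypothetical instructor cue, already detected by some sensor."""
    kind: str                     # "point" or "speech"
    position: Optional[Point] = None
    angle: Optional[str] = None
    framing: Optional[str] = None


def apply_cue(shot: Shot, cue: Cue) -> Shot:
    """Return the shot that results from one cue; unknown cues change nothing."""
    if cue.kind == "point" and cue.position is not None:
        # Pointing at a tool: pan so the camera looks at the pointed-at spot.
        return replace(shot, target=cue.position)
    if cue.kind == "speech":
        # Speech: only override the settings the utterance actually implied.
        return replace(
            shot,
            angle=cue.angle or shot.angle,
            framing=cue.framing or shot.framing,
        )
    return shot


shot = Shot(target=(0.0, 0.0, 0.0))
shot = apply_cue(shot, Cue(kind="point", position=(0.3, 0.1, 0.0)))
shot = apply_cue(shot, Cue(kind="speech", angle="high"))
print(shot)
```

Keeping the shot immutable and returning a new one for each cue makes it easy to log or replay the sequence of cues from a recording session.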

The instructor’s voice is recorded by a wireless microphone and sent to Microsoft Azure Speech-to-Text, a speech-recognition service. The transcribed text, together with a custom prompt, is then forwarded to GPT-3, a large language model, which labels the instructor’s intent for the camera, such as standard versus high angle and normal versus tight framing.
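The labelling step can be sketched as wrapping the transcript in a prompt and parsing the model's answer into the two settings named above. This is a minimal sketch under stated assumptions: the prompt wording, the `angle=...; framing=...` answer format, and every function name here are hypothetical, and `fake_complete` is a keyword-based stand-in so the example runs without calling any real speech or language-model service.

```python
from typing import Callable, Dict

ANGLES = ("standard", "high")
FRAMINGS = ("normal", "tight")

# Hypothetical prompt; the article does not give the researchers' wording.
PROMPT_TEMPLATE = (
    "Label the camera shot the instructor wants.\n"
    "Answer in the form 'angle=<standard|high>; framing=<normal|tight>'.\n"
    "Instructor said: \"{utterance}\"\n"
    "Answer:"
)


def build_prompt(utterance: str) -> str:
    """Wrap the transcribed speech in the (assumed) labelling prompt."""
    return PROMPT_TEMPLATE.format(utterance=utterance.strip())


def parse_labels(completion: str) -> Dict[str, str]:
    """Parse 'angle=...; framing=...', falling back to the default shot."""
    labels = {"angle": "standard", "framing": "normal"}
    for part in completion.lower().split(";"):
        key, _, value = part.partition("=")
        key, value = key.strip(), value.strip()
        if key == "angle" and value in ANGLES:
            labels["angle"] = value
        elif key == "framing" and value in FRAMINGS:
            labels["framing"] = value
    return labels


def label_intent(utterance: str, complete: Callable[[str], str]) -> Dict[str, str]:
    """Run transcript -> prompt -> model answer -> camera labels."""
    return parse_labels(complete(build_prompt(utterance)))


def fake_complete(prompt: str) -> str:
    """Stand-in for a language model: simple keyword rules, for the demo only."""
    utterance = prompt.split("Instructor said:", 1)[1].lower()
    angle = "high" if "from above" in utterance else "standard"
    framing = "tight" if "close" in utterance else "normal"
    return f"angle={angle}; framing={framing}"


print(label_intent("If you saw how I dropped the 'A' into the 'B' from above", fake_complete))
```

Passing the model call in as the `complete` argument keeps the parsing logic testable on its own. Falling back to the default shot when the answer cannot be parsed means a malformed reply leaves the camera where it is rather than moving it unexpectedly.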

“The goal is to have the robot understand in real time what kind of shot the instructor wants,” says Jiannan Li, a Ph.D. candidate in U of T’s Department of Computer Science in the Faculty of Arts & Science. “The important part of this goal is that we want these vocabularies to be non-disruptive. It should feel like they fit into the tutorial.”

Stargazer’s capabilities were tested in a study involving six instructors, each teaching a different skill to create dynamic tutorial videos.

Participants were given one practice session each and completed their tutorial in two takes. The researchers reported that all participants could produce videos without needing any controls beyond those provided by the robotic camera and were satisfied with the quality of the resulting videos.

While Stargazer’s range of camera positions is sufficient for tabletop activities, the research team is exploring the potential of camera drones and wheeled robots to assist in filming tasks from various angles in larger environments.

This robot can help content creators make smooth, attractive videos with good audio quality. The camera robot’s ability to recognize and react to cues could be expanded in the future and applied to other robotic applications.

Journal reference:

- Jiannan Li, Maurício Sousa, Karthik Mahadevan, Bryan Wang, Paula Akemi Aoyagui, Nicole Yu, Angela Yang, Ravin Balakrishnan, Anthony Tang, Tovi Grossman. Stargazer: An Interactive Camera Robot for Capturing How-To Videos Based on Subtle Instructor Cues. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, 2023; DOI: 10.1145/3544548.3580896