With the help of machine learning, electronic devices – including electronic gloves and electronic skins – can track the movement of human hands and perform tasks such as object and gesture recognition. However, such devices require multiple sensor components to read each finger joint, making them bulky. They also cannot adapt to the curvature of the body. Furthermore, existing signal-processing models require large amounts of labeled data to recognize individual tasks for every user.

Now, researchers at Stanford University have developed a new smart skin that might foretell a day when people type on an invisible keyboard, identify objects by touch alone, or communicate by hand gestures with apps in immersive environments. The new stretchable, biocompatible material gets sprayed on the back of the hand. A tiny electrical network integrated into the mesh senses how the skin stretches and bends, and, using AI, researchers can interpret myriad daily tasks from hand motions and gestures.

The new practical approach is both lean enough in form and adaptable enough in function to work for essentially any user – even with limited data.

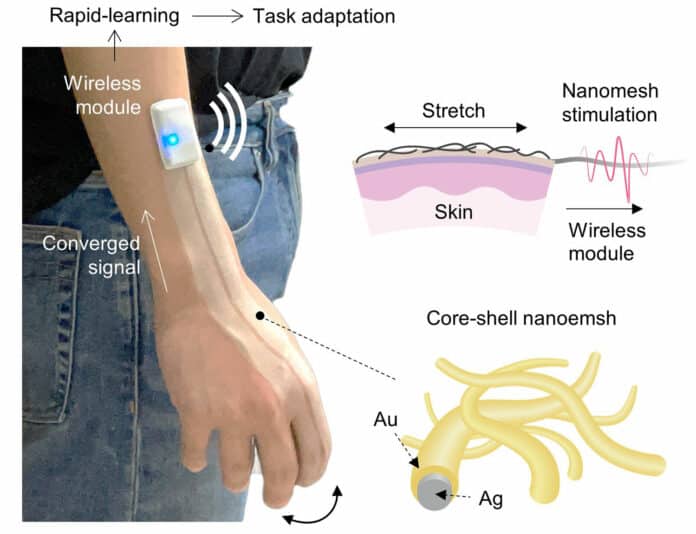

The spray-on sensory system consists of a printed, biocompatible nanomesh network embedded in polyurethane. The mesh is composed of gold-coated silver nanowires that are in contact with each other to form dynamic electrical pathways. This mesh is electrically active, biocompatible, and breathable, and it stays on unless rubbed with soap and water. It conforms intimately to the wrinkles and folds of each finger it covers. A lightweight Bluetooth module attached to the mesh then wirelessly transmits the signal changes.

“As the fingers bend and twist, the nanowires in the mesh get squeezed together and stretched apart, changing the electrical conductivity of the mesh. These changes can be measured and analyzed to tell us precisely how a hand or a finger or a joint is moving,” explained Zhenan Bao, a K.K. Lee Professor of Chemical Engineering and senior author of the study.

The spray-on nature of the smart skin allows it to conform to a hand of any size or shape, and it also opens the possibility that the device could be adapted to the face to capture subtle emotional cues. That might enable new approaches to computer animation or lead to new avatar-led virtual meetings with more realistic facial expressions and hand gestures.

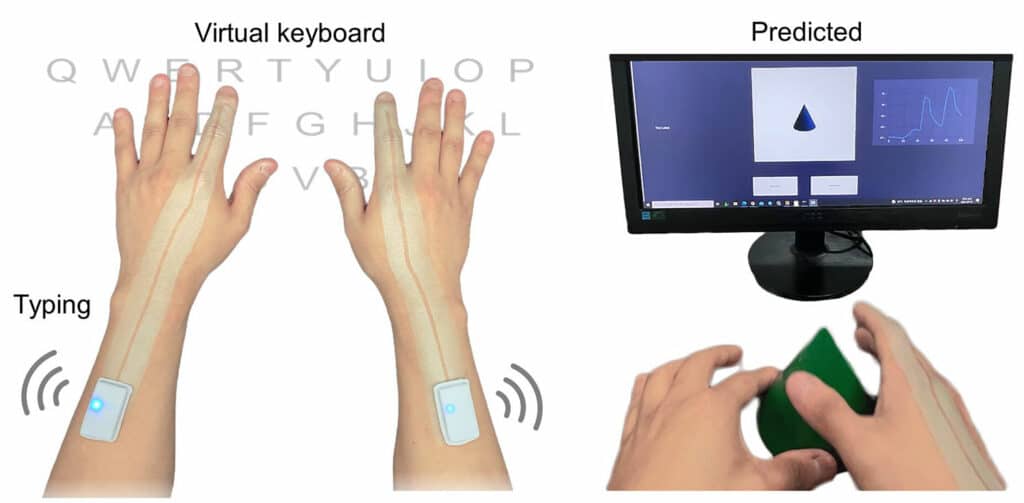

Machine learning then takes over. Computers monitor the changing patterns in conductivity and map those changes to specific physical tasks and gestures. For instance, type an X on a keyboard, and the algorithm learns to recognize that task from the changing patterns in the electrical conductivity. Once the algorithm is suitably trained, the physical keyboard is no longer necessary. The same principles can be used to recognize sign language or even to recognize objects by tracing their exterior surfaces.

The Stanford team has developed a learning scheme that is far more computationally efficient than existing approaches. “We brought the aspects of human learning that rapidly adapt to tasks with only a handful of trials known as ‘meta-learning.’ This allows the device to rapidly recognize arbitrary new hand tasks and users with a few quick trials,” said Kyun Kyu “Richard” Kim, a post-doctoral scholar in Bao’s lab, who is the first author of the study.
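To give a rough sense of how a system might recognize a task from only a few trials, here is a minimal Python sketch of nearest-prototype classification, a simple technique often used in few-shot learning. It is not the researchers' algorithm, and everything in it is an assumption made for illustration: the three-channel "conductivity change" readings, the labels `key_X` and `key_O`, and the function names `build_prototypes` and `classify`.

```python
from math import dist
from statistics import fmean


def build_prototypes(support):
    """Average the few labeled trials of each task into one prototype vector."""
    prototypes = {}
    for label, trials in support.items():
        # zip(*trials) groups the readings channel by channel.
        prototypes[label] = [fmean(channel) for channel in zip(*trials)]
    return prototypes


def classify(sample, prototypes):
    """Return the label whose prototype is closest (Euclidean) to the sample."""
    return min(prototypes, key=lambda label: dist(sample, prototypes[label]))


# Hypothetical data: two trials per task, three signal channels each.
support = {
    "key_X": [[0.9, 0.1, 0.2], [1.0, 0.2, 0.1]],
    "key_O": [[0.1, 0.8, 0.9], [0.2, 1.0, 0.8]],
}
prototypes = build_prototypes(support)

print(classify([0.85, 0.15, 0.2], prototypes))  # prints "key_X"
```

With only two made-up trials per task, a new reading is matched to the closest averaged pattern. A real system would work on time-varying signals and a learned representation rather than raw three-number vectors.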

The researchers have built a prototype that recognizes simple objects by touch and can even do predictive two-handed typing on an invisible keyboard. During the lab tests, the algorithm was able to type “No legacy is so rich as honesty” from William Shakespeare and “I am the master of my fate, I am the captain of my soul” from William Ernest Henley’s poem “Invictus.”

The researchers say it could have applications and implications in fields as far-ranging as gaming, sports, telemedicine, and robotics.

Journal reference:

- Kyun Kyu Kim, Min Kim, Kyungrok Pyun, Jin Kim, Jinki Min, Seunghun Koh, Samuel E. Root, Jaewon Kim, Bao-Nguyen T. Nguyen, Yuya Nishio, Seonggeun Han, Joonhwa Choi, C-Yoon Kim, Jeffrey B.-H. Tok, Sungho Jo, Seung Hwan Ko and Zhenan Bao. A substrate-less nanomesh receptor with meta-learning for rapid hand task recognition. Nature Electronics, 2022; DOI: 10.1038/s41928-022-00888-7