Technology that restores communication for paralyzed people who cannot speak has the potential to improve their autonomy and quality of life, and could help them lead more normal lives.

Now, researchers at the University of California, San Francisco, have successfully developed a “speech neuroprosthesis” that allows people with paralysis to communicate even if they are unable to speak on their own. The technology has enabled a man with severe paralysis to communicate in sentences, translating signals from his brain to the vocal tract directly into words that appear as text on a screen.

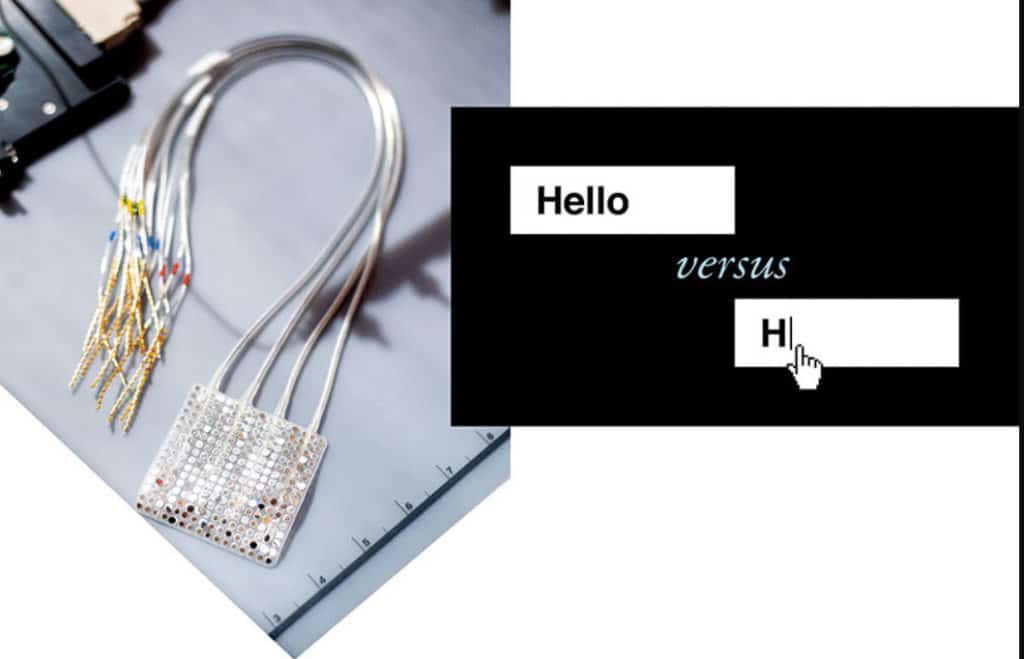

The first participant in the trial was a man in his late 30s who suffered a devastating brainstem stroke more than 15 years ago that severely damaged the connection between his brain and his vocal tract and limbs. The team implanted a high-density electrode array designed to detect signals from speech-related sensory and motor processes linked to the mouth, lips, jaw, tongue, and larynx.

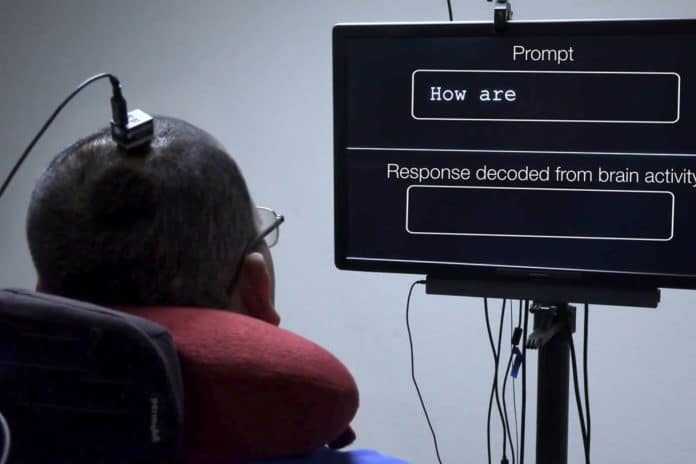

The brain implant was connected to a computer via a cable attached to his head, and he was asked to try to say common words such as “water,” “family,” and “good.” As he did so, the electrodes transmitted signals to the computer, where advanced algorithms recognized the intended words. Over 48 sessions, the team recorded 22 hours of cortical activity. The participant, who asked to be referred to as BRAVO1, attempted to say each of the 50 vocabulary words many times in each session while the electrodes recorded brain signals from his speech cortex.

UCSF neurosurgeon Edward Chang and his team used deep-learning algorithms to create computational models that detect and classify words from patterns in the recorded cortical activity. To test their approach, the team first presented BRAVO1 with short sentences constructed from the 50 vocabulary words and asked him to try saying them several times. As he made his attempts, the words were decoded from his brain activity and displayed, one by one, on a screen.

Then the team switched to prompting him with questions such as “How are you today?” and “Would you like some water?” As before, BRAVO1’s attempted speech appeared on the screen: “I am very good,” and “No, I am not thirsty.”

The electrodes of the speech neuroprosthesis do not read the mind; they detect brain signals corresponding to each word the patient tries to say. Through them, BRAVO1 communicated at 15 to 18 words per minute with up to 93% accuracy. Chang says that faster decoding is possible, although it is unclear whether it will approach the pace of a typical conversation, about 150 words per minute; reaching that pace would likely require years of further study and improvement.

Contributing to the success was a language model developed by David Moses, Ph.D., a postdoctoral engineer in the Chang lab. The model implemented an “auto-correct” function, similar to what is used by consumer texting and speech recognition software.
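To give a feel for how such an “auto-correct” step can work, here is a minimal sketch in Python. It is not the team's code. It assumes a word classifier that outputs a probability for each vocabulary word at each position, plus a simple bigram language model, and it combines the two with Viterbi decoding to pick the most plausible sentence. The function name `viterbi_decode`, the tiny vocabulary, and every probability below are made up for illustration.

```python
import math

def viterbi_decode(emissions, bigram, vocab, floor=1e-6):
    """Return the most likely word sequence.

    emissions: one dict per word position, mapping word -> classifier probability.
    bigram:    dict mapping (previous_word, word) -> language-model probability;
               "<s>" marks the start of the sentence.
    floor:     small probability used for any pair or word that is not listed.
    """
    # best[w] = (log score of the best path ending in w, that path)
    best = {}
    for w in vocab:
        score = math.log(emissions[0].get(w, floor)) + math.log(bigram.get(("<s>", w), floor))
        best[w] = (score, [w])

    for step in emissions[1:]:
        new_best = {}
        for w in vocab:
            # Pick the previous word that makes the path ending in w most likely.
            prev_word, (prev_score, prev_path) = max(
                best.items(),
                key=lambda item: item[1][0] + math.log(bigram.get((item[0], w), floor)),
            )
            score = (
                prev_score
                + math.log(bigram.get((prev_word, w), floor))
                + math.log(step.get(w, floor))
            )
            new_best[w] = (score, prev_path + [w])
        best = new_best

    return max(best.values(), key=lambda v: v[0])[1]

# Illustrative numbers only: the classifier slightly prefers "family" in the second slot.
vocab = ["i", "am", "good", "family"]
emissions = [
    {"i": 0.90, "am": 0.05, "good": 0.03, "family": 0.02},
    {"i": 0.05, "am": 0.40, "good": 0.05, "family": 0.50},
    {"i": 0.05, "am": 0.10, "good": 0.80, "family": 0.05},
]
bigram = {
    ("<s>", "i"): 0.80,
    ("i", "am"): 0.90,
    ("i", "family"): 0.01,
    ("am", "good"): 0.70,
    ("family", "good"): 0.05,
}

# Taking the top classifier guess at each position, with no language model:
greedy = [max(step, key=step.get) for step in emissions]
# With the language model, the unlikely pair "i family" is corrected:
decoded = viterbi_decode(emissions, bigram, vocab)

print(" ".join(greedy))   # i family good
print(" ".join(decoded))  # i am good
```

In this toy case the classifier alone would output “i family good,” but because “i am” is a far more common word pair than “i family,” the combined decoder settles on “i am good.”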

Looking forward, Chang and Moses said they would expand the trial to include more participants affected by severe paralysis and communication deficits. The team is currently working to increase the number of words in the available vocabulary and improve the rate of speech.