Undoubtedly, artificial intelligence (AI) is taking surveillance technology to new heights. Using machine learning, cameras can now detect objects, people, or suspicious activity without human intervention. These cameras have improved the efficiency and accuracy of person detection at airports, railway stations, and other sensitive public places.

Want to go undetected by these AI-powered surveillance cameras?

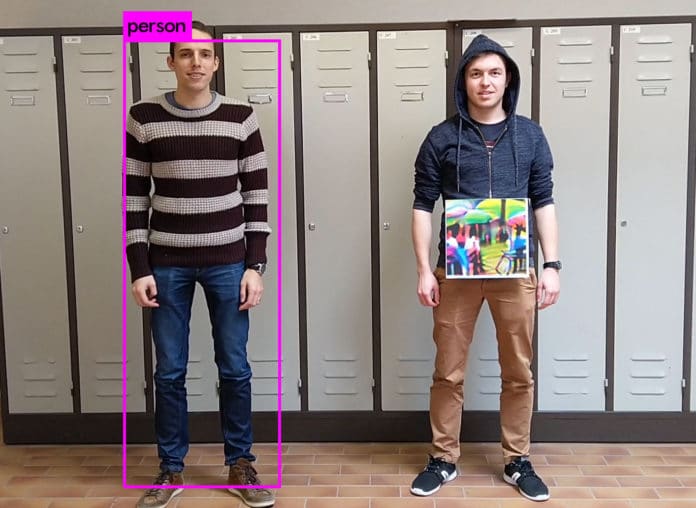

Three machine learning researchers from KU Leuven in Belgium found a way to confuse such an AI system. They developed a small, square, colorful printed patch that can act as a cloaking device, hiding people from object detectors. They call it an “adversarial patch”.

This 40 sq. cm printed patch can significantly lower the accuracy of an object detector. The researchers, Simen Thys, Wiebe Van Ranst, and Toon Goedemé, have published their paper on the arXiv preprint server.

The team has also uploaded a video to YouTube demonstrating that a person holding the patch goes undetected by the AI camera, while a person without the patch is detected successfully. When the patch is flipped to its blank side, the camera detects the person again.

How does it all happen?

An AI detector learns to recognize objects and people by being shown thousands of labeled examples from each category. However, recent research shows that person-detecting AI systems can be fooled by specially crafted images held in front of the body.
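To make the idea of being “fooled” concrete, here is a toy sketch, not the researchers' code. It replaces the neural network with a hypothetical linear “person score” (positive means a person is detected) and, as an assumption, changes only the pixels inside a patch region, nudging each one in the direction that lowers the score. Attacks on real networks such as YOLOv2 obtain the equivalent gradients by backpropagating through the whole model.

```python
def person_score(image, weights, bias):
    """Hypothetical linear 'detector': weighted sum of pixels plus a bias.

    A positive score means 'person detected'. This stands in for a real
    neural network and is purely illustrative.
    """
    return sum(w * x for w, x in zip(weights, image)) + bias


def patch_attack(image, weights, patch_idx, step=0.1, iters=50):
    """Lower the score by editing only the pixels listed in patch_idx.

    For a linear scorer the gradient with respect to pixel i is weights[i],
    so each step moves a patch pixel against the sign of its weight and
    clips it to the valid [0, 1] range (a signed-gradient update).
    Pixels outside the patch are never modified.
    """
    adv = list(image)
    for _ in range(iters):
        for i in patch_idx:
            if weights[i] > 0:
                adv[i] = max(0.0, adv[i] - step)
            elif weights[i] < 0:
                adv[i] = min(1.0, adv[i] + step)
    return adv


# Made-up example: an 8-pixel "image" whose last 4 pixels form the patch.
weights = [0.5, 0.4, 0.3, -0.2, 0.9, 0.8, -0.7, 0.6]
bias = -1.0
image = [0.6] * 8
adv = patch_attack(image, weights, patch_idx=range(4, 8))
print(person_score(image, weights, bias) > 0, person_score(adv, weights, bias) > 0)  # True False
```

Only the patch pixels change, yet the score drops below the detection threshold, which mirrors the article's point that an image held in front of the body, rather than any change to the person, is what defeats the detector.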

The KU Leuven researchers write, “Creating adversarial attacks for person detection is more challenging because human appearance has many more variations than for example stop signs.”

For testing, the researchers used YOLOv2, a popular fully convolutional neural network for object detection. The team created several types of images and tested them against the detector until they found one that worked particularly well. The patch seen in the video is an image of people holding colorful umbrellas, altered by rotating it and adding noise.
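Random rotations and noise like those described above are commonly applied while an adversarial patch is being developed, so that it keeps working after it is printed, tilted, and captured by a noisy camera. The minimal sketch below shows one such augmentation step on a small grayscale patch stored as nested lists. The function names, the ±20° angle range, and the noise level are assumptions for illustration, not values from the paper.

```python
import math
import random


def rotate_nearest(patch, angle_deg, fill=0.0):
    """Rotate a square grayscale patch (a list of rows) about its center.

    Each output pixel is mapped back to its source position and takes the
    nearest source pixel; positions that fall outside the patch get `fill`.
    """
    n = len(patch)
    c = (n - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            x, y = j - c, i - c
            # Inverse rotation: find where this output pixel came from.
            src_j = round(cos_a * x + sin_a * y + c)
            src_i = round(-sin_a * x + cos_a * y + c)
            if 0 <= src_i < n and 0 <= src_j < n:
                out[i][j] = patch[src_i][src_j]
    return out


def add_noise(patch, sigma, rng):
    """Add Gaussian noise to every pixel and clip to the valid [0, 1] range."""
    return [[min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row]
            for row in patch]


def random_transform(patch, rng, max_angle=20.0, sigma=0.05):
    """One random rotation plus noise (assumed ranges, not from the paper)."""
    angle = rng.uniform(-max_angle, max_angle)
    return add_noise(rotate_nearest(patch, angle), sigma, rng)


# Usage: produce a few randomly transformed copies of a flat gray patch.
rng = random.Random(0)
patch = [[0.5] * 8 for _ in range(8)]
augmented = [random_transform(patch, rng) for _ in range(4)]
```

Checking the patch against many such variants, instead of a single clean copy, is what makes it more likely to survive real-world viewing conditions.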

The patch also has to be held so that it falls inside the bounding box the detector draws around a person when deciding whether an object is present. The researchers found that the patches worked “quite well” as long as they were positioned correctly.

“From our results, we can see that our system is able [to] significantly lower the accuracy of a person detector…In most cases, our patch is able to successfully hide the person from the detector. Where this is not the case, the patch is not aligned to the center of the person,” the researchers said.
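One rough way to quantify how much a patch lowers a person detector's accuracy is to compare recall, the fraction of people the detector finds, on the same scenes with and without the patch. This is an assumed, simplified metric, not the evaluation reported in the paper; the function names, the `(x1, y1, x2, y2, confidence)` detection format, the 0.5 confidence and IoU thresholds, and the example numbers are all illustrative.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def person_recall(ground_truth, detections, conf_thresh=0.5, iou_thresh=0.5):
    """Fraction of ground-truth people matched by a confident detection.

    Detections are (x1, y1, x2, y2, confidence). For each person in turn,
    the unused detection with the highest IoU (at least iou_thresh) is taken,
    so each detection can match at most one person.
    """
    if not ground_truth:
        return 0.0
    kept = [d for d in detections if d[4] >= conf_thresh]
    used = set()
    matched = 0
    for gt in ground_truth:
        best_k, best_iou = None, iou_thresh
        for k, det in enumerate(kept):
            if k in used:
                continue
            overlap = iou(gt, det[:4])
            if overlap >= best_iou:
                best_k, best_iou = k, overlap
        if best_k is not None:
            used.add(best_k)
            matched += 1
    return matched / len(ground_truth)


# Made-up numbers: the first person holds a patch in the second scene,
# and the detector's confidence for them drops below the threshold.
people = [(100, 50, 200, 350), (300, 60, 390, 340)]
without_patch = [(102, 55, 198, 345, 0.90), (305, 58, 392, 338, 0.85)]
with_patch = [(305, 58, 392, 338, 0.85), (110, 60, 190, 300, 0.20)]
print(person_recall(people, without_patch))  # 1.0
print(person_recall(people, with_patch))     # 0.5
```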