Intel is investing heavily in solutions that accelerate artificial intelligence workloads. The latest result of this investment is a pair of recently presented chips from the Nervana family, which are designed to deliver a matching performance increase in complex applications that demand a lot of computing power.

The American company presented two Intel Nervana Neural Network Processors (NNP): the NNP-T1000 for deep learning training and the NNP-I1000 for inference. These are the first Intel ASICs developed specifically for complex deep learning, and with them the company aims to deliver high scalability and efficiency to the data center and cloud sector.

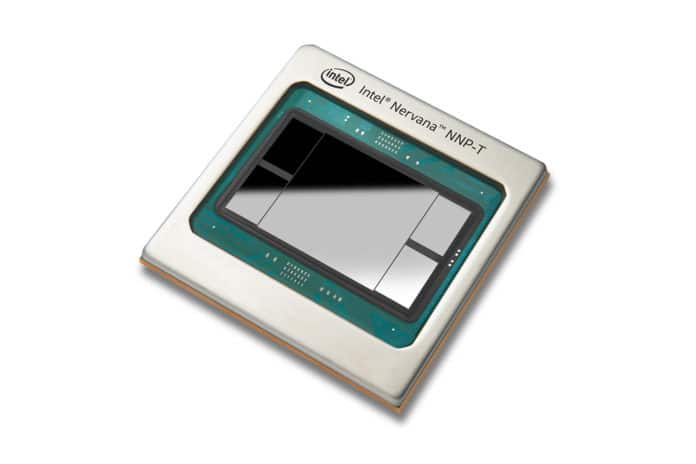

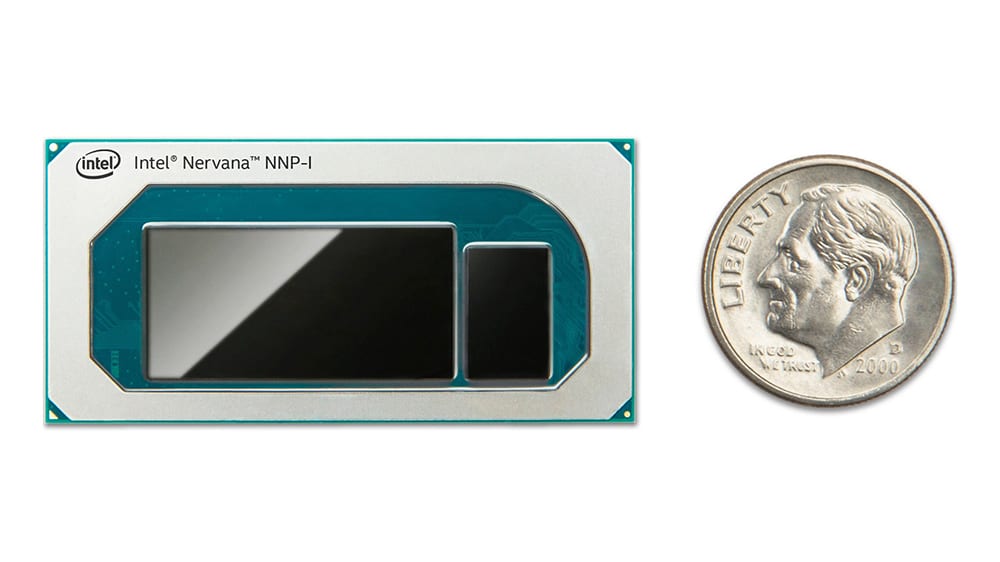

The Intel Nervana NNP-T, shown in the first image above, offers a good balance of computing, communication, and memory, allowing near-linear scaling and high energy efficiency from small clusters up to supercomputers. The Intel Nervana NNP-I, shown next to a coin in the second image, is power- and budget-efficient and is ideal for running intensive multimodal inference at real-world scale in flexible form factors.

Alongside the new processors, Intel also unveiled a next-generation Movidius Myriad Vision Processing Unit (VPU) for multimedia applications, computer vision, and edge inference. It includes architectural improvements intended to deliver more than ten times the inference performance of the previous generation and up to six times the energy efficiency of competing processors. The chip is expected to be available in the first half of 2020.

Intel points out that the most advanced deep learning training workloads of its customers, such as Facebook and Baidu, require computing performance to double roughly every three and a half months, so the large performance gains Intel is reporting should come as no surprise. In fact, a Facebook representative has already confirmed that the NNP-I will be used in the company's data centers.
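To put that doubling rate in perspective, here is a small Python sketch that converts a doubling period into the equivalent growth factor over a longer span. It assumes clean, uninterrupted exponential growth, which is a simplification; the function name `growth_factor` is purely illustrative.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Return how many times performance grows over `months`
    if it doubles every `doubling_period_months` (simple exponential model)."""
    return 2 ** (months / doubling_period_months)


if __name__ == "__main__":
    # Doubling every 3.5 months, the rate cited for advanced deep learning training
    for span in (12, 24):
        print(f"{span} months: ~{growth_factor(span, 3.5):.1f}x")
```

Under this simplified model, doubling every three and a half months compounds to roughly 10.8x per year and more than 100x over two years.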

Finally, the company announced the new Intel DevCloud for the Edge, which, together with the Intel Distribution of the OpenVINO toolkit, will let developers experiment with, prototype, and test artificial intelligence solutions on a wide range of Intel processors before purchasing the hardware.