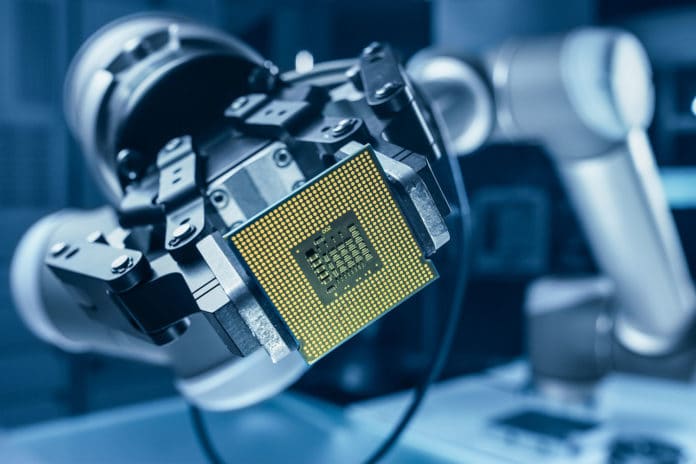

Success or failure in designing microchips depends heavily on steps known as floorplanning and placement. Despite five decades of research, chip floorplanning has defied automation, requiring months of intense effort by physical design engineers to produce manufacturable layouts.

Google researchers are now using artificial intelligence (AI) to design the company’s next generation of machine learning chips more quickly. According to Google engineers, the algorithm’s designs are comparable or even superior to those created by human experts on all key metrics, including performance, energy consumption, and chip area.

The company claims that the AI can finish in under six hours work that takes human engineers months, which could save thousands of hours of human effort for each generation of chips.

Google has been developing machine learning algorithms to design chips for years. This latest effort, described in a paper in the journal Nature, is now being applied to a commercial product: an upcoming version of Google’s own TPU (Tensor Processing Unit) chips, which are used mainly in the company’s cloud-based machine-learning applications.

In the paper, Google’s engineers note that this new research has major implications for the chip industry, unlocking opportunities for co-optimization with earlier stages of the chip design process. It will allow companies to explore possible architectures for future projects more quickly and make it easier to customize chips for specific workloads.

The Nature editors called this an important achievement, noting that the study could help overcome the predicted end of Moore’s Law, the observation that the number of transistors per chip doubles roughly every two years as transistors shrink. A neural network developed by Google will not necessarily solve the physical challenges of squeezing ever more transistors onto chips, but it could help find other ways to keep performance rising at the same rate.
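Taken literally, the two-year doubling rule is an exponential. Writing $N_0$ for a chip’s transistor count today and $t$ for elapsed years (symbols introduced here purely for illustration):

$$
N(t) \approx N_0 \cdot 2^{t/2}
$$

Over a decade that gives $2^{10/2} = 2^5 = 32$ times as many transistors. A modest slowdown compounds quickly: with a three-year doubling period, the same decade yields only $2^{10/3} \approx 10$ times as many.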

Floorplanning usually requires expert human designers who work with the aid of computer tools to find the optimal layout of a chip’s subsystems on a silicon die. Essentially, it means deciding where components such as CPU and GPU cores and memory sit relative to each other on the chip. Their placement on the die matters because it affects the chip’s power consumption and processing speed. Designing a floorplan takes humans months of intense effort, but from a machine learning perspective there is a familiar way to tackle the problem: treat it as a game.
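One common way to compare candidate floorplans is by how much wire they need, since longer connections generally cost more delay and power. The sketch below scores a layout with half-perimeter wirelength (HPWL), a standard proxy in placement tools, on a hypothetical grid where each component occupies one cell. The component names, coordinates, and connections are illustrative assumptions, not Google’s data or code.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # (column, row) on a coarse grid laid over the die


def hpwl(placement: Dict[str, Cell], nets: List[Tuple[str, ...]]) -> int:
    """Sum of half-perimeter wirelengths: for each net, the width plus the
    height of the smallest box enclosing every component it connects."""
    total = 0
    for net in nets:
        xs = [placement[block][0] for block in net]
        ys = [placement[block][1] for block in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


# Hypothetical components and connections ("nets"), purely for illustration.
nets = [("cpu", "cache"), ("cache", "memory"), ("cpu", "gpu", "memory")]

compact = {"cpu": (0, 0), "cache": (1, 0), "memory": (1, 1), "gpu": (0, 1)}
spread = {"cpu": (0, 0), "cache": (3, 3), "memory": (0, 3), "gpu": (3, 0)}

print(hpwl(compact, nets))  # → 4
print(hpwl(spread, nets))   # → 15
```

The same four components need almost four times as much estimated wiring when scattered to the corners, which is the kind of difference a floorplanner tries to avoid.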

Google’s researchers therefore framed chip design as a game for the AI to play. Instead of a game board, there is a silicon die; instead of pieces such as pawns and knights, there are components such as CPUs and GPUs. The objective is to find the arrangement of components that delivers the best computational efficiency.
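The game framing can be made concrete with a toy version. In this hypothetical sketch (not Google’s environment), the board is a small grid over the die, each move places the next component on a free cell, and the final score is the negative half-perimeter wirelength, so a tighter layout scores higher. The grid size, component names, and connections are assumptions chosen for illustration.

```python
import random
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


class PlacementGame:
    """Toy 'board game' view of floorplanning: the board is a W x H grid,
    the pieces are components, and each move puts the next component on a
    free cell. Score = negative half-perimeter wirelength."""

    def __init__(self, width: int, height: int, blocks: List[str],
                 nets: List[Tuple[str, ...]]):
        self.width, self.height = width, height
        self.blocks, self.nets = blocks, nets
        self.placement: Dict[str, Cell] = {}

    def legal_moves(self) -> List[Cell]:
        taken = set(self.placement.values())
        return [(x, y) for x in range(self.width) for y in range(self.height)
                if (x, y) not in taken]

    def next_block(self) -> str:
        return self.blocks[len(self.placement)]

    def play(self, cell: Cell) -> None:
        if cell not in self.legal_moves():
            raise ValueError(f"cell {cell} is occupied or off the board")
        self.placement[self.next_block()] = cell

    def is_over(self) -> bool:
        return len(self.placement) == len(self.blocks)

    def score(self) -> int:
        total = 0
        for net in self.nets:
            xs = [self.placement[b][0] for b in net]
            ys = [self.placement[b][1] for b in net]
            total += (max(xs) - min(xs)) + (max(ys) - min(ys))
        return -total


# Play one game with random moves.
random.seed(0)
game = PlacementGame(4, 4, ["cpu", "gpu", "cache", "memory"],
                     [("cpu", "cache"), ("gpu", "memory"), ("cache", "memory")])
while not game.is_over():
    game.play(random.choice(game.legal_moves()))
print(game.placement, game.score())
```

A random player like this one is the baseline a learning agent has to beat: it needs a policy that picks moves leading to higher final scores.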

The engineers then trained a reinforcement learning algorithm on a dataset of 10,000 chip floorplans of varying quality, some of which were generated randomly. From this data, the algorithm learned to distinguish good floorplans from bad ones and then generated its own designs, which used no more space, wire, or electric power than those created by engineers.
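The learning loop can be sketched in miniature. This example is not Google’s method: it swaps in plain REINFORCE (a basic policy-gradient algorithm) with a lookup table of scores instead of Google’s neural network, and the components, connections, grid size, and hyperparameters are hypothetical. It does capture the idea of learning to tell good floorplans from bad: each sampled floorplan is scored, compared with a running average of recent scores, and the choices behind better-than-average floorplans are made more likely.

```python
import math
import random
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
Net = Tuple[str, ...]


def hpwl(placement: Dict[str, Cell], nets: List[Net]) -> int:
    """Total half-perimeter wirelength: width + height of each net's bounding box."""
    total = 0
    for net in nets:
        xs = [placement[b][0] for b in net]
        ys = [placement[b][1] for b in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def train(blocks: List[str], nets: List[Net], width: int, height: int,
          episodes: int = 3000, lr: float = 0.1, seed: int = 0):
    """REINFORCE with a lookup-table policy: one learnable logit per
    (block, cell) pair. Blocks are placed one at a time onto free cells."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(width) for y in range(height)]
    logits = {b: {c: 0.0 for c in cells} for b in blocks}
    baseline = None  # running average reward: what a "typical" floorplan scores

    def softmax_over(block: str, free: List[Cell]) -> Dict[Cell, float]:
        m = max(logits[block][c] for c in free)  # subtract max for stability
        w = {c: math.exp(logits[block][c] - m) for c in free}
        z = sum(w.values())
        return {c: w[c] / z for c in free}

    for _ in range(episodes):
        placement: Dict[str, Cell] = {}
        trace = []
        for b in blocks:
            free = [c for c in cells if c not in placement.values()]
            p = softmax_over(b, free)
            choice = rng.choices(free, weights=[p[c] for c in free])[0]
            placement[b] = choice
            trace.append((b, choice, p))

        reward = -hpwl(placement, nets)
        baseline = reward if baseline is None else 0.95 * baseline + 0.05 * reward
        advantage = reward - baseline  # > 0 means better than a typical floorplan

        # Policy-gradient step: the gradient of log-softmax is 1[c == choice] - p(c),
        # taken over the cells that were free at that step.
        for b, choice, p in trace:
            for c, pc in p.items():
                indicator = 1.0 if c == choice else 0.0
                logits[b][c] += lr * advantage * (indicator - pc)
    return logits, cells


def greedy_placement(blocks: List[str], logits, cells: List[Cell]) -> Dict[str, Cell]:
    """After training, place each block on its highest-scoring free cell."""
    placement: Dict[str, Cell] = {}
    for b in blocks:
        free = [c for c in cells if c not in placement.values()]
        placement[b] = max(free, key=lambda c: logits[b][c])
    return placement


# Hypothetical 3 x 3 grid with four components.
blocks = ["cpu", "cache", "memory", "gpu"]
nets = [("cpu", "cache"), ("cache", "memory"), ("cpu", "gpu")]
logits, cells = train(blocks, nets, width=3, height=3)
best = greedy_placement(blocks, logits, cells)
print(best, hpwl(best, nets))
```

The running-average baseline is what turns raw scores into a good-versus-bad signal. Every reward here is negative, so without it each update would lower the probability of every sampled choice and learn only from differences in magnitude.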

Google has already adopted the system and plans to use it to reduce production costs and build more efficient chips.